Creating a Test Run

The instructions below describe how to create a test run with minimal requirements. Optionally, you can also configure advanced settings.

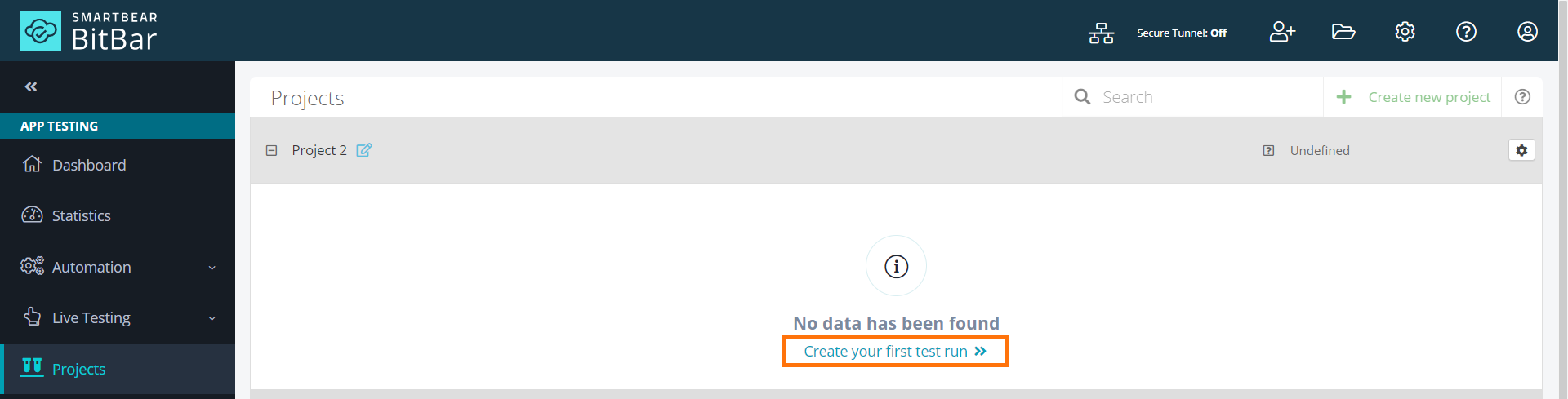

Click Projects in the left navigation menu, then expand your project in the Projects section.

If the project has no test runs, click Create your first test run.

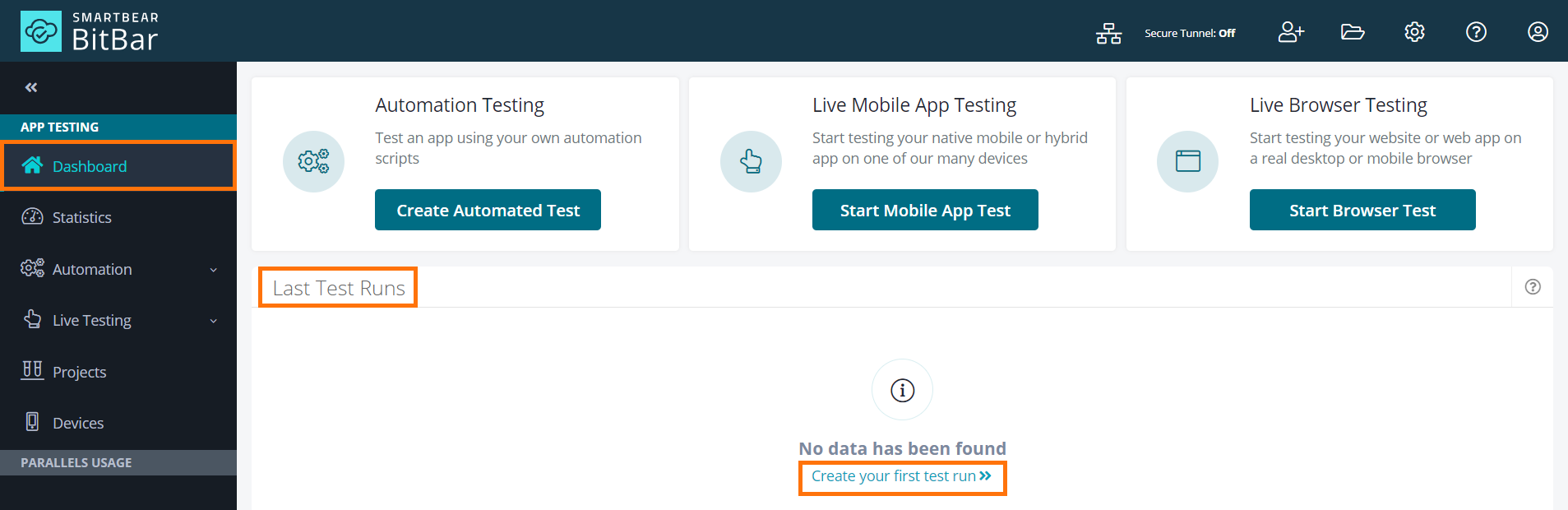

Alternatively, you can navigate to the Dashboard in the left navigation menu, and in the Last Test Runs section, click Create your first test run.

If the project has test runs, click Add new test run.

OR

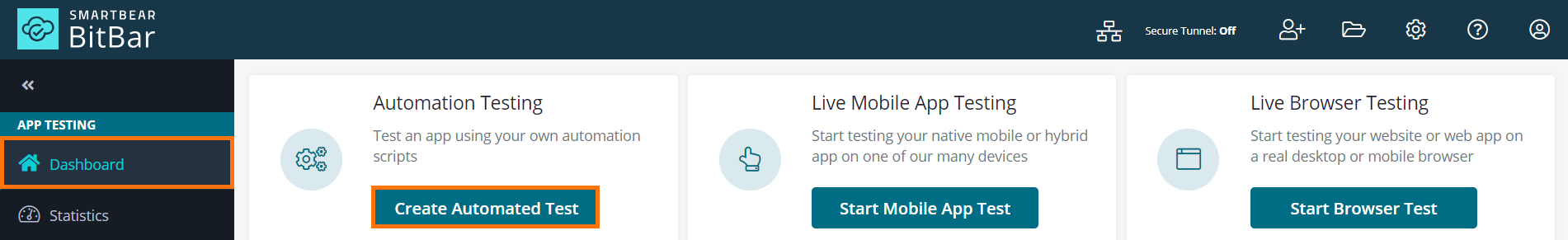

On the Dashboard, in the Automation Testing section, click Create Automated Test.

OR

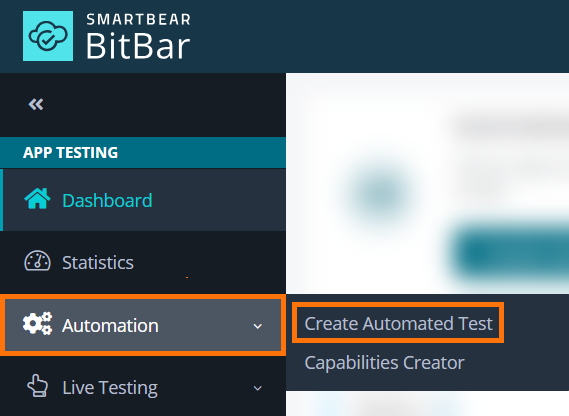

In the left navigation menu, select Automation > Create Automated Test.

Select a target operating system in the Select a target OS type step:

iOS – Select for testing iOS applications.

Android – Select for testing Android applications.

Desktop – Select for browser testing.

Depending on the previously selected operating system, select one of the available frameworks in the Select a framework step. Currently, the supported frameworks are:

Note

Depending on your cloud setup, the names and available frameworks may differ from the ones listed below.

Table 5. Supported Testing Frameworks across iOS, Android, and Desktop

| iOS | Android | Desktop |
|---|---|---|
| Appium iOS Client Side | Android Instrumentation and Espresso | Selenium Client Side |
| Server Side (Appium and other frameworks) | Appium Android Client Side | Desktop Cypress Server Side |
| XCTest | Server Side (Appium and other frameworks) | |
| XCUITest | Flutter Android | |
| Flutter iOS | | |

Upload an application file in the Choose files step. You can upload up to three files.

Tip

If you do not have a test app file yet, click Use our samples to upload sample apps.

Choose an action to perform on each uploaded file. BitBar selects a default action based on the file type; you can change the selection if needed:

Install on the device – Apply this action to application package files (.apk on Android, .ipa on iOS). BitBar will upload the selected package to the device and install it there.

Copy to the device storage – Works for Android devices and .zip files only (iOS is not supported). BitBar will copy the specified .zip archive to the device and unpack it there. You can then use the unpacked files in your tests.

Use to run the test – BitBar will upload the file to the device. If the file is a .zip archive, BitBar will unpack it on the device. If you use the Android Instrumentation framework for test automation and upload an .apk file, BitBar will use that file to run the test.
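The rules above can be turned into a quick pre-upload check. The Python sketch below is only an illustration built from the descriptions in this step. The names `applicable_actions`, `check_upload`, and `MAX_FILES` are hypothetical, and the code does not reproduce BitBar's own default-selection logic.

```python
from pathlib import Path

MAX_FILES = 3  # the Choose files step accepts up to three files


def applicable_actions(filename: str, target_os: str) -> list[str]:
    """List the actions that the descriptions above allow for one file."""
    ext = Path(filename).suffix.lower()
    os_name = target_os.lower()
    actions = []
    # Application packages: .apk on Android, .ipa on iOS.
    if (os_name, ext) in {("android", ".apk"), ("ios", ".ipa")}:
        actions.append("Install on the device")
    # Copying to device storage works for Android devices and .zip files only.
    if os_name == "android" and ext == ".zip":
        actions.append("Copy to the device storage")
    # Assumption: any uploaded file may be used to run the test.
    actions.append("Use to run the test")
    return actions


def check_upload(filenames: list[str], target_os: str) -> dict[str, list[str]]:
    """Reject more than MAX_FILES files, then map each file to its actions."""
    if len(filenames) > MAX_FILES:
        raise ValueError(f"at most {MAX_FILES} files can be uploaded")
    return {name: applicable_actions(name, target_os) for name in filenames}
```

For example, `check_upload(["app.apk", "tests.zip"], "Android")` maps each file name to the actions that make sense for it, which you can compare with the default that BitBar preselects.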

Select a device or a device group in the Choose devices step:

Use existing device group – Select this option if you want to use trial devices or have an existing device group.

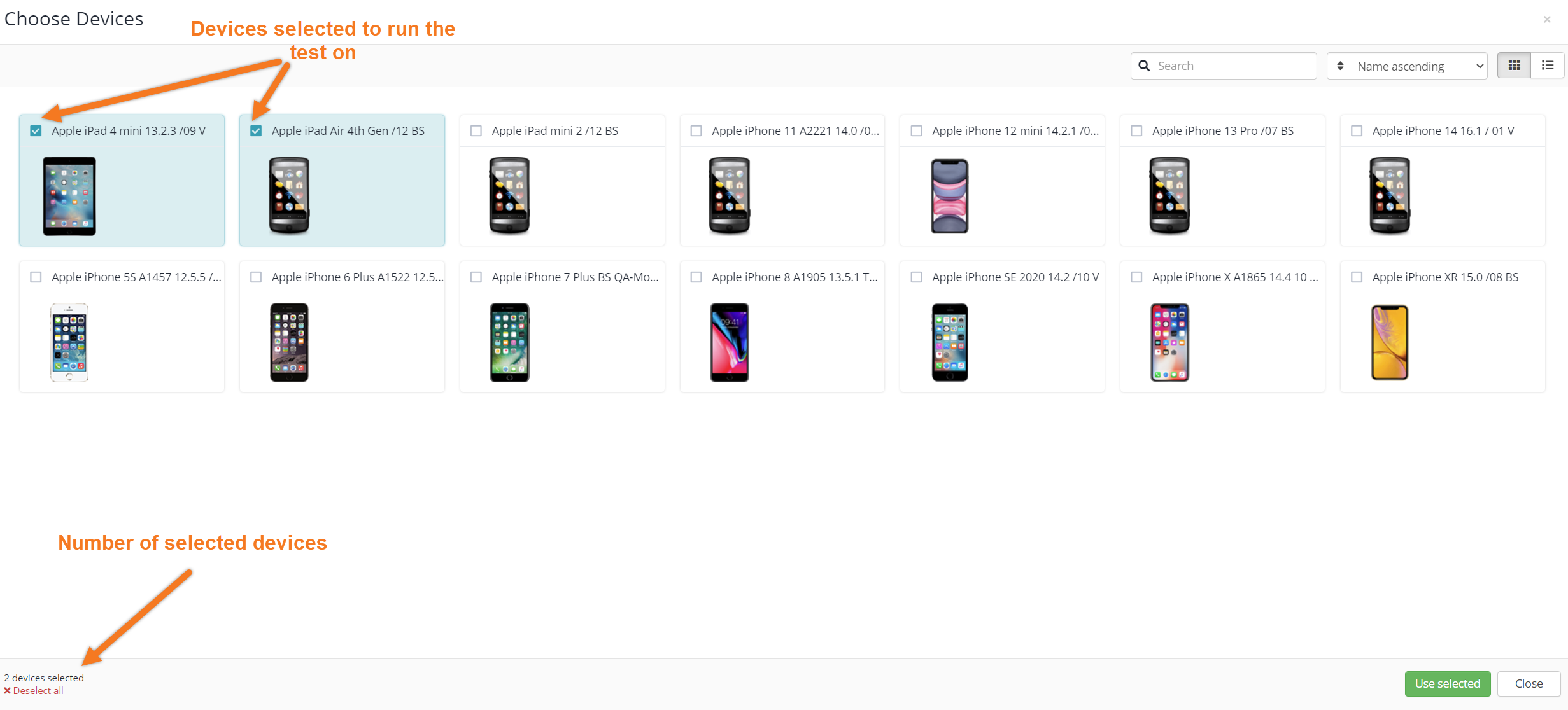

Use chosen devices – Select this option if you want to choose devices from the list of available devices. You can run your tests in parallel on multiple devices. To do that, click Click to choose devices and select the devices you want.

Run on currently idle devices – Select this option if you want to run your test on random devices that are not currently used.

Click Create and run automated test.

Advanced settings

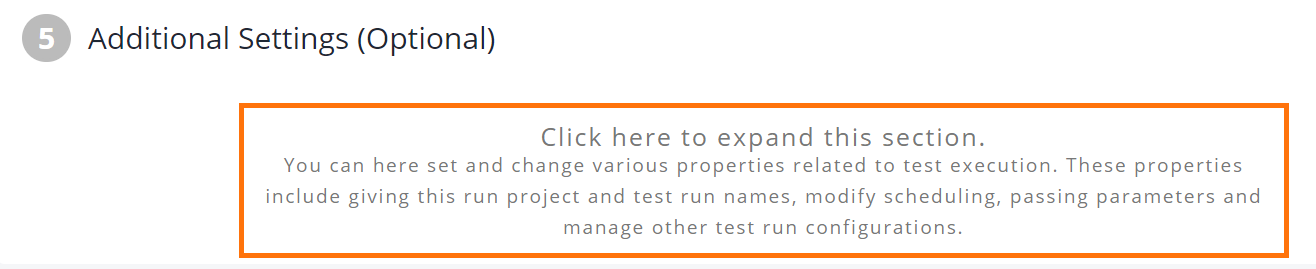

In addition to the basic settings described above, you can configure a number of optional settings for the test run.

To open advanced settings, click the area under the Additional settings (optional) step.

| Setting | Description |
|---|---|
| Project name | The name of the project in which to store the test run. If the specified project does not exist, a new project is created. If you omit this option, a new project is created with a default name, for example, Project 1. |
| Test run name | A name that describes what you are testing in this test run, for example, a build number, fixed bug ID, or date and time. |
| Language | The language to be set on the selected devices before starting the test run. |
| Test time-out period | The timeout of the test run start. The default value is 10 minutes. Possible values are: none, 5, 10, 15, 20, 30, and 60 minutes. |
| Scheduling | Select how and when to run tests on the selected devices: simultaneously, on one device at a time, or on available devices first only. |
| Use test cases from | Applies to Android Instrumentation test runs. Select a test class of the package to be executed if you do not want to run the whole test suite. |
| Test case options | The test case options to be included or excluded. |
| Test finished hook | When the test run finishes, BitBar can make a POST call to a URL that you specify. In addition to this hook URL, you can use email or Slack integrations to get notified of finished test runs. |
| Screenshots configuration | By default, screenshots are stored on the device's SD card at |
| Custom test runner | The test runner to be used. The default value: |
| Test user credentials | The user name and password combination that should be used during the AppCrawler test run. |
| Custom test run parameters | Public Cloud supports a number of Shell environment variables that are made available to each test run. These can be used for test case sharding or selecting an execution logic for runs. For Espresso test sharding, variables Xcode-based test suites can be controlled with<br><br>In On-Premise and Private Cloud setups, users can create their own key-value pairs. For customers with advanced plans, it is also possible in Public Cloud to create keys for specific tasks to be done before, during, or after a test run. |
| Disable Applications resigning | Prevents applications from being re-signed during testing, so that they retain their original signature. |
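The Test finished hook setting makes a POST call to a URL that you provide. As a minimal sketch of what could sit behind that URL, the Python example below accepts the POST and prints whatever it receives. This page does not describe the payload format, so the code assumes no field names and decodes the body generically. The names `decode_hook_body`, `HookHandler`, and `serve`, as well as port 8080, are hypothetical choices for this illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs


def decode_hook_body(content_type: str, raw: bytes) -> dict:
    """Decode a hook request body without assuming a payload schema."""
    text = raw.decode("utf-8", errors="replace")
    kind = content_type.lower()
    if "json" in kind:
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            return {"raw": text}
        return data if isinstance(data, dict) else {"value": data}
    if "x-www-form-urlencoded" in kind:
        # parse_qs returns a list per field; keep the first value of each.
        return {key: values[0] for key, values in parse_qs(text).items()}
    return {"raw": text}


class HookHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length)
        payload = decode_hook_body(self.headers.get("Content-Type", ""), body)
        print("Test run finished, hook payload:", payload)
        self.send_response(204)  # acknowledge with an empty response
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for hook calls until interrupted (the port is an arbitrary choice)."""
    HTTPServer(("0.0.0.0", port), HookHandler).serve_forever()
```

To try it, call `serve()` on a host that the cloud can reach and enter that host's URL as the hook. Inspect the printed payload before you rely on any particular field.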