BitBar Dashboard Overview

BitBar is a versatile cloud-based platform for testing mobile and web applications. With BitBar, you can run live manual tests, or automated tests with any of the supported frameworks. BitBar supports testing on desktop browsers (Windows, macOS, and Linux) and on real iOS and Android devices.

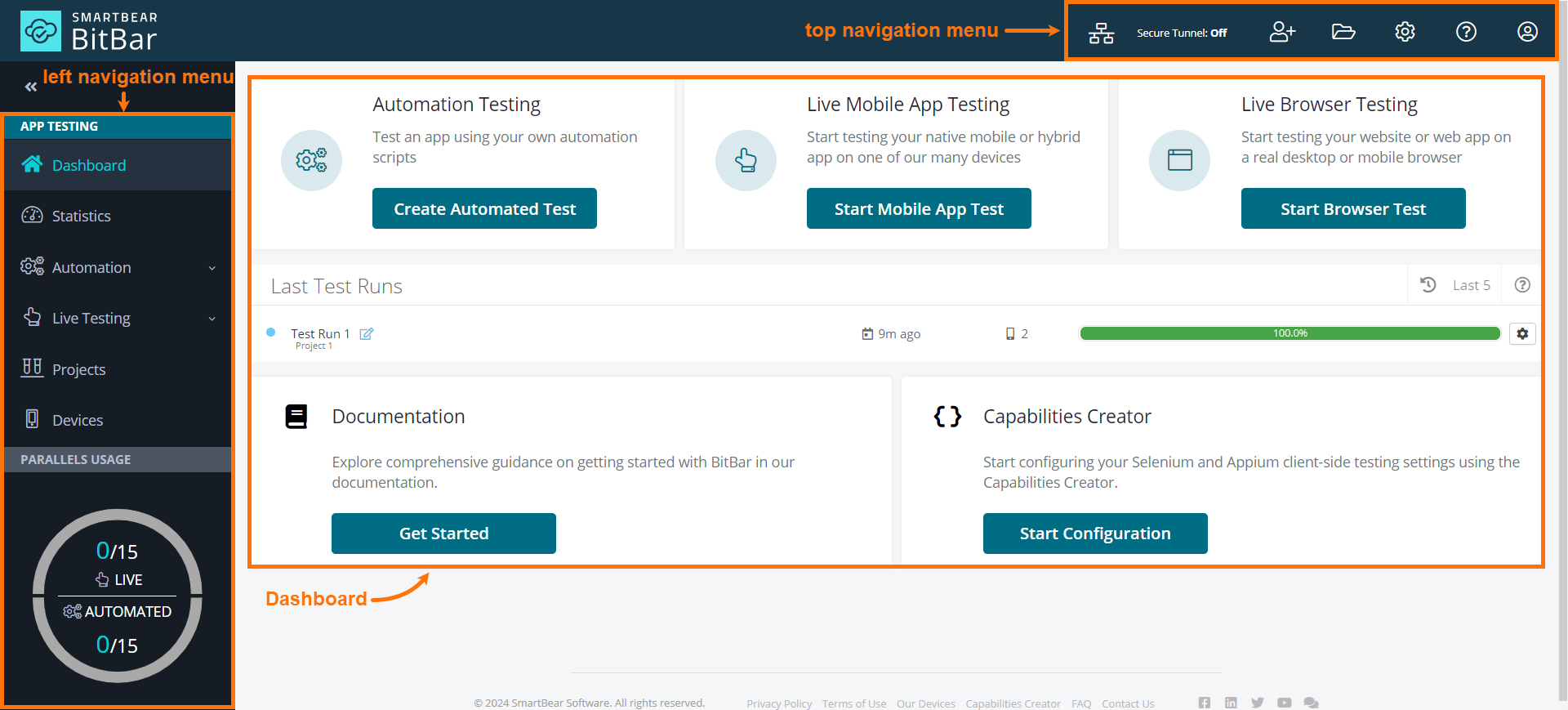

Once you log in, you will land on the Dashboard page.

Tip

You can return to this page at any time, from anywhere in the application, by using the left navigation menu.

The Dashboard page comprises three primary components:


Dashboard

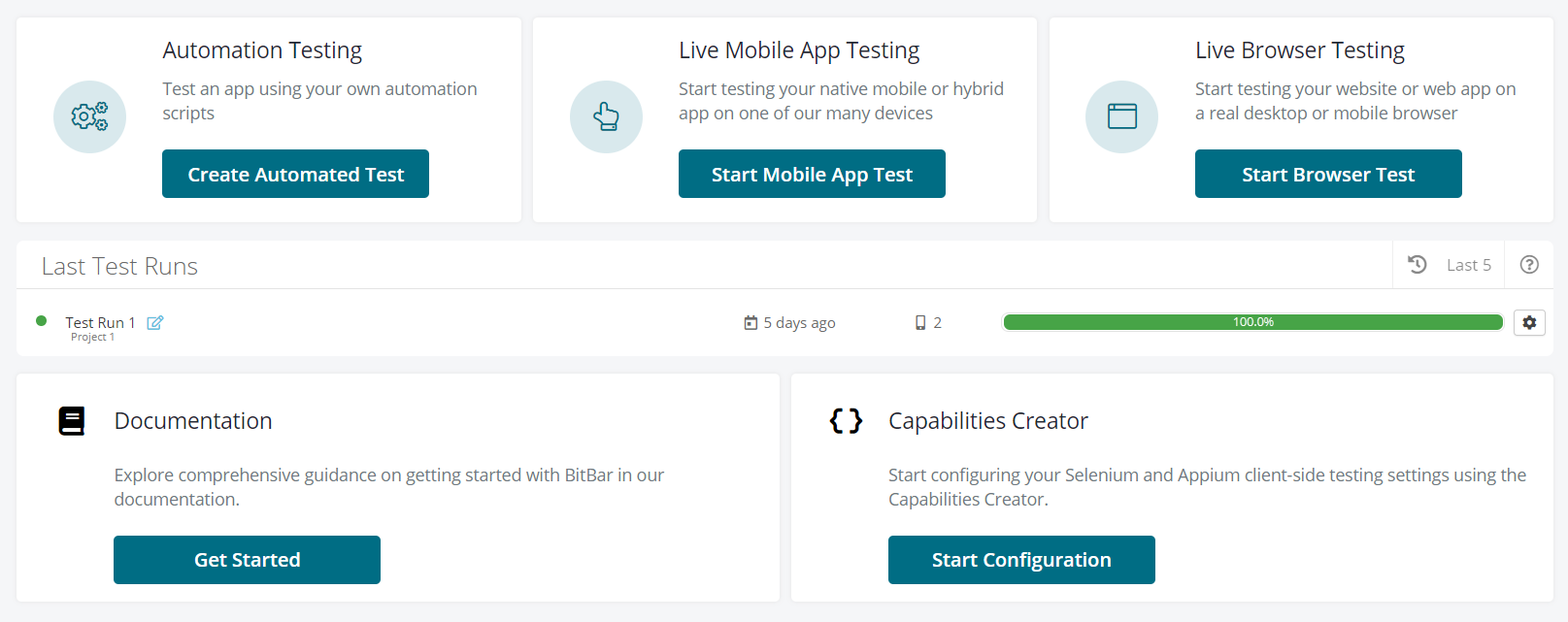

The Dashboard is your command center for all testing activities.

From the Dashboard, you can:

Create an automated test.

Start testing your native mobile or hybrid app on one of our many devices.

Start testing your website or web app on a real desktop or mobile browser.

View your most recent test runs.

Explore our documentation on getting started with BitBar.

Use the Capabilities Creator to configure the client-side settings for your Selenium and Appium tests.
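Selenium and Appium scripts pass their client-side settings to the server as a set of capabilities when they open a session. As a rough sketch of what such a set can look like, the Python snippet below builds a W3C-style capabilities dictionary. `browserName` and `platformName` are standard W3C WebDriver capabilities. The `bitbar:options` block, its `apiKey` key, and the `build_capabilities` helper are hypothetical placeholders for illustration only. Copy the actual keys and values from the Capabilities Creator rather than from this example.

```python
import json

# Hypothetical placeholder: substitute the value the Capabilities Creator gives you.
API_KEY = "YOUR_BITBAR_API_KEY"


def build_capabilities(browser_name: str, platform_name: str, api_key: str) -> dict:
    """Return a W3C-style capabilities dictionary (illustrative sketch).

    browserName and platformName are standard W3C WebDriver capabilities.
    The "bitbar:options" block and its "apiKey" key are assumptions,
    not a confirmed BitBar schema.
    """
    return {
        "browserName": browser_name,
        "platformName": platform_name,
        # W3C rule: vendor-specific (extension) capability names contain a ":".
        "bitbar:options": {
            "apiKey": api_key,
        },
    }


caps = build_capabilities("chrome", "windows", API_KEY)
print(json.dumps(caps, indent=2))
```

Grouping the vendor-specific settings under one prefixed key keeps the standard W3C capabilities separate from anything that only the BitBar service understands.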
