When you run your load tests, LoadNinja generates various charts and metrics and displays them on the Test Results screen. Use that screen to review the statistics on your test runs and to debug issues.

Open the Results screen

LoadNinja activates the screen right after you start a load test. During the run, the screen displays real-time data on test execution. It remains active after the run is over, so you can easily view the final results.

To view the results of previous test runs, do either of the following:

-

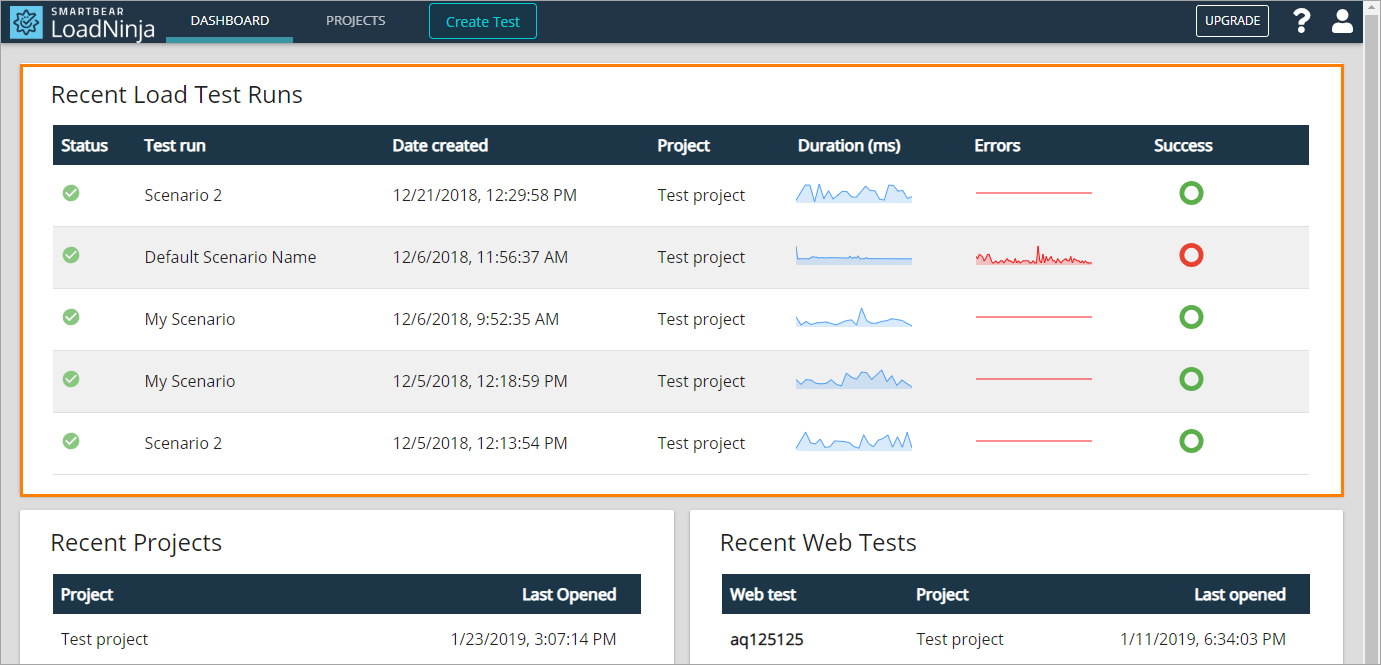

Open results of a recent test run from the Dashboard:

– OR –

-

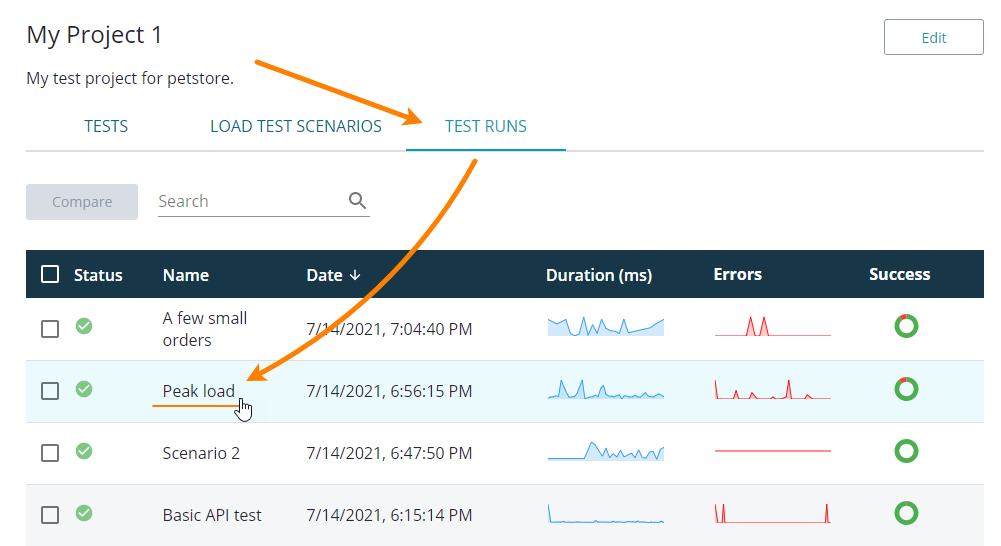

View results from the Projects > Test Runs list.

Results screen

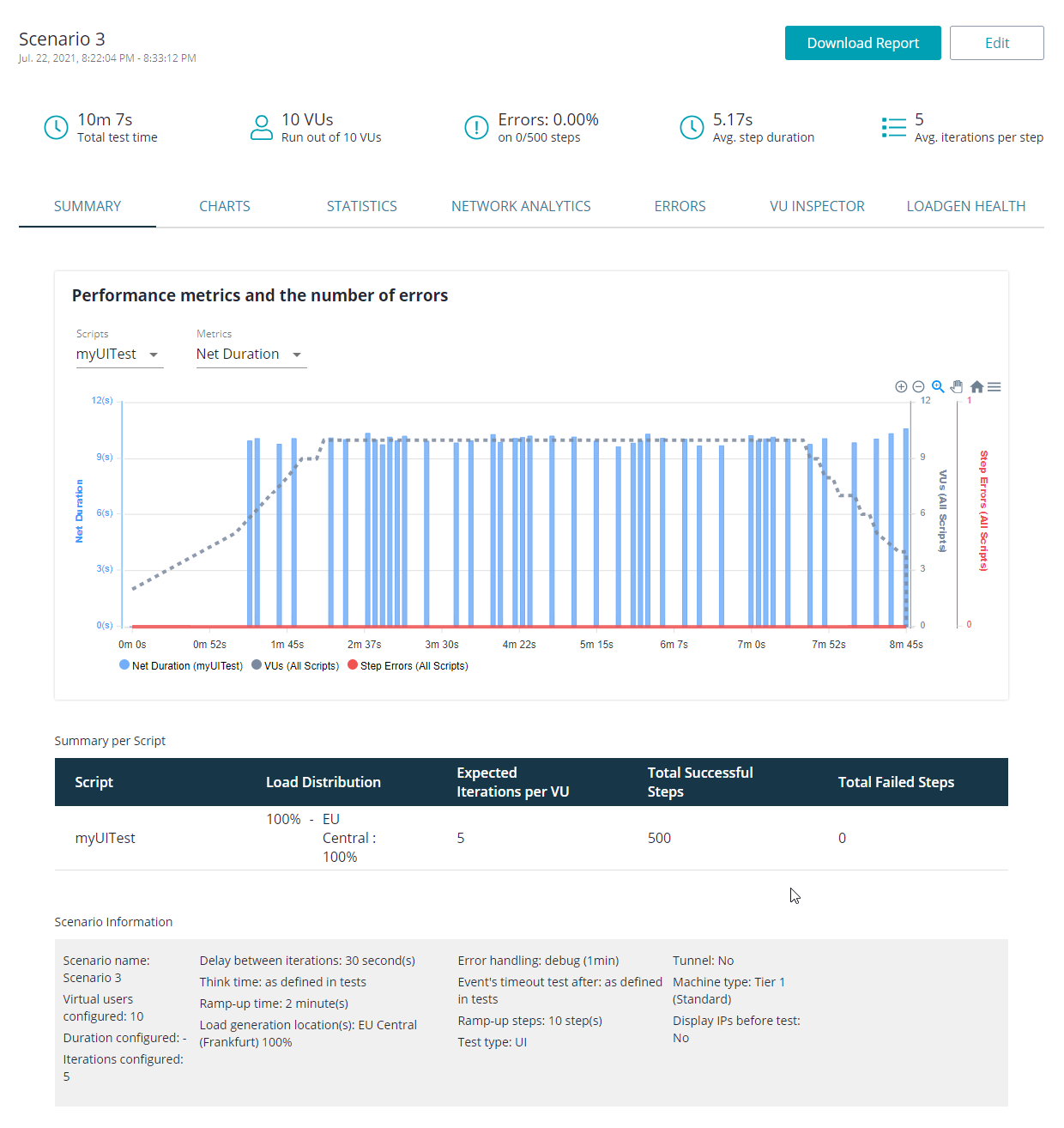

Here is a sample view of the screen:

| Tab | Description |
|---|---|
| | Active by default. Provides a brief overview of the test run and the accumulated results and metrics. See the tab description for information on its chart and metrics. |
| | Statistics on various aspects of the tested web app. |
| | Information on timings and failure types. |
| | An entry point for the built-in AI analyzer, which helps you find possible network issues. |
| | Information on errors that occurred during the test run. Also provides an entry point for virtual user debugging. |
| | Live preview of the remote desktops of the cloud computers where virtual users are running. |
| | Performance metrics received from LoadNinja servers. They help you find cases where performance issues were caused by the test infrastructure. |

See instructions


Download (export) results
