Supported Actions

Unlike other record-and-playback tools, you'll never have to manually enter a test step in Reflect. Instead, our test recorder is capable of automatically detecting a wide array of user actions, letting you create a regression test simply by interacting with your website.

Important

This section covers the supported actions for web testing. If you are interested in mobile testing, check Supported Actions in Reflect Mobile.

Clicks

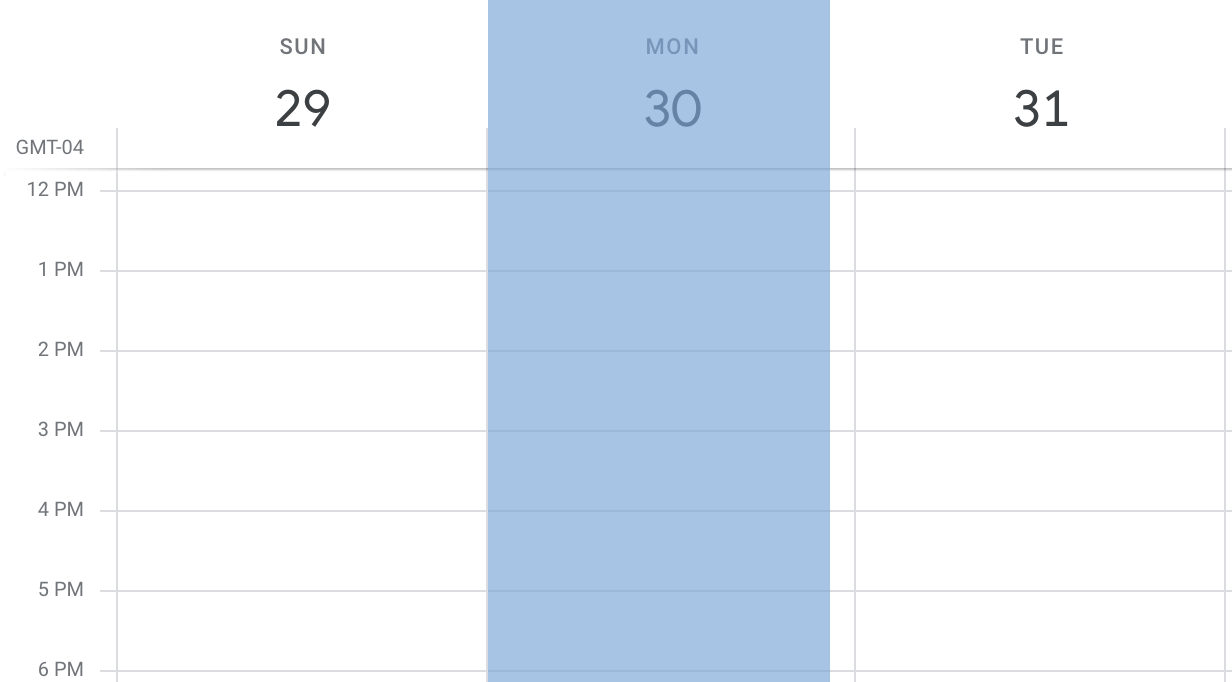

Among the most common of recorded actions are clicks. When recording a test, Reflect detects the element that was clicked as well as its (x,y) coordinates relative to the top-left of the clicked element. This enables us to accurately record scenarios in which the exact location of the click is important. One such example is Google Calendar. Within Google Calendar, each day within the “Week” calendar-view is represented by a single DOM element. This means that to accurately record a test wherein a meeting is created at a specific time of day, a click must be executed at the proper (x,y) coordinates representing that time of day.

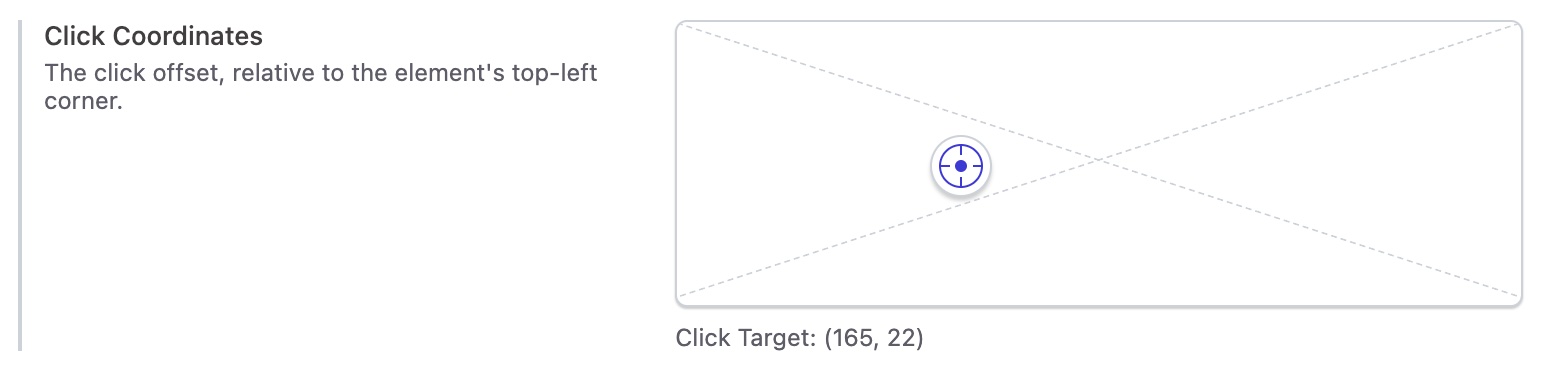

Click coordinates for a given test step can be viewed within the Test Step Detail view for either a previous test run or an active recording.
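As a rough illustration of element-relative coordinates, the sketch below derives an (x, y) offset from a click's viewport position and the clicked element's bounding box. The helper name relativeClickOffset is hypothetical and this is not Reflect's actual implementation; in a browser the inputs would typically come from event.clientX / event.clientY and element.getBoundingClientRect().

```javascript
// Hypothetical helper (not part of Reflect): convert a click's viewport
// coordinates into coordinates relative to the top-left corner of the
// clicked element.
function relativeClickOffset(click, rect) {
  return {
    x: click.clientX - rect.left,
    y: click.clientY - rect.top,
  };
}

// In a browser, the inputs could be gathered like this:
//   document.addEventListener("click", (event) => {
//     const rect = event.target.getBoundingClientRect();
//     console.log(relativeClickOffset(event, rect));
//   });

// Plain-object example: a click at viewport position (250, 430) on an
// element whose bounding box starts at (200, 100).
const offset = relativeClickOffset(
  { clientX: 250, clientY: 430 },
  { left: 200, top: 100 }
);
console.log(offset); // { x: 50, y: 330 }
```

For a tall element such as a calendar day column, the y offset is what distinguishes one time slot from another.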

In addition to normal left-clicks, Reflect also includes accurate detection and playback of right-clicks and double-clicks.

Mobile Taps

Mobile web browsers will fire touch events rather than click events when users tap on an element on the page. For this reason, in mobile testing scenarios Reflect will leverage touch event APIs to record and execute taps instead of clicks.

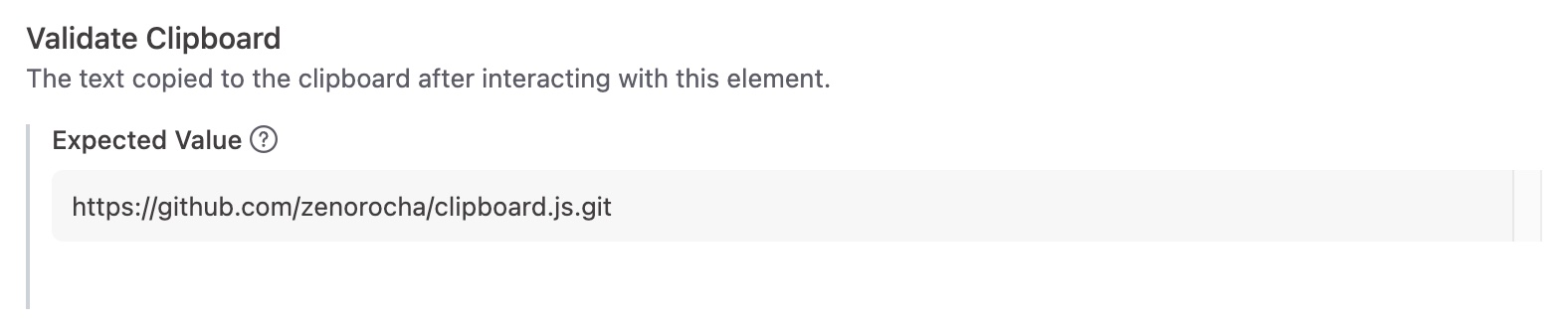

Copy to Clipboard

If a click results in content being copied to the clipboard, Reflect will capture and display the copied contents. If on a subsequent test run the click does not result in a clipboard-copy, or if the clipboard contents do not match the expected value, the test will be marked as failed.

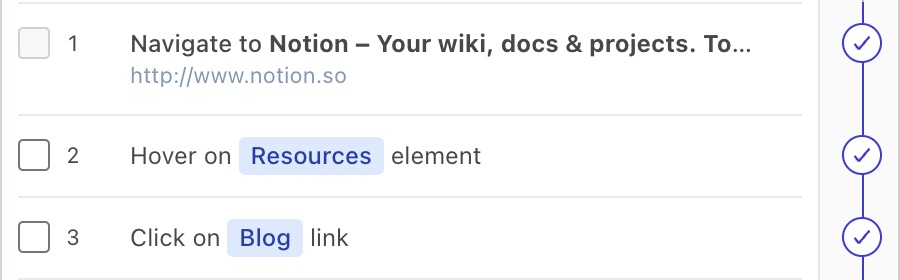

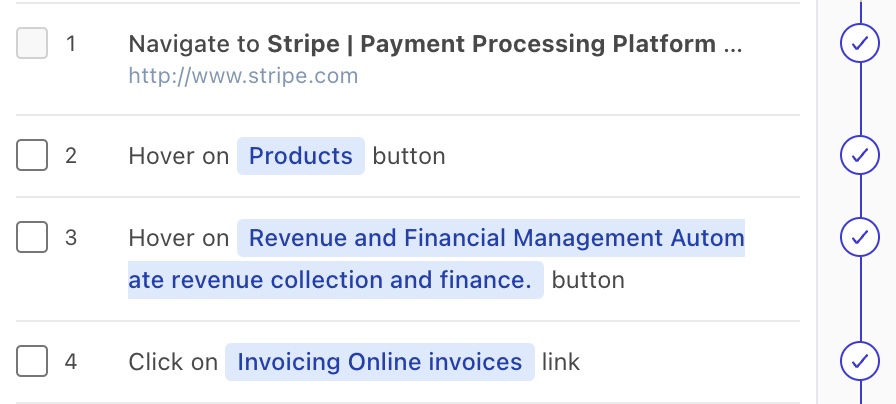

Hovers

Reflect detects hover actions automatically, without user input, and only includes the hover actions relevant to the test. As you record your test, Reflect monitors for element hovers that are followed by other actions such as clicks and text inputs. When a relevant hover action is detected, it is automatically inserted into the recorded set of test steps.

Consecutive hovers, such as hovering through primary, secondary, and tertiary navigation screens before clicking on an element, are also supported.

Reflect detects both CSS-based hovers (e.g. :hover) and JavaScript-based hovers, regardless of the application’s JS framework.

In cases where Reflect’s automatic hover detection is not working as desired, you can use an AI step or a JavaScript step to interact more explicitly with the target elements.
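If you take the JavaScript step route, one possible approach is to dispatch the hover-related events yourself. The sketch below is an assumption about how such a step could look, not a Reflect API: the helper name dispatchHover and the selector in the comment are hypothetical. Note that synthetic events only trigger JavaScript event listeners; they do not activate the CSS :hover pseudo-class.

```javascript
// Hypothetical helper: fire the events that JavaScript-based hover
// handlers usually listen for. Falls back to the generic Event
// constructor where MouseEvent is unavailable.
function dispatchHover(element) {
  const EventCtor = typeof MouseEvent === "function" ? MouseEvent : Event;
  // mouseenter does not bubble; mouseover does.
  element.dispatchEvent(new EventCtor("mouseenter", { bubbles: false }));
  element.dispatchEvent(new EventCtor("mouseover", { bubbles: true }));
  return element;
}

// Inside a JavaScript step, the body might be (selector is hypothetical):
//   dispatchHover(document.querySelector(".nav-item"));
//   return document.querySelector(".nav-item .submenu") !== null;
```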

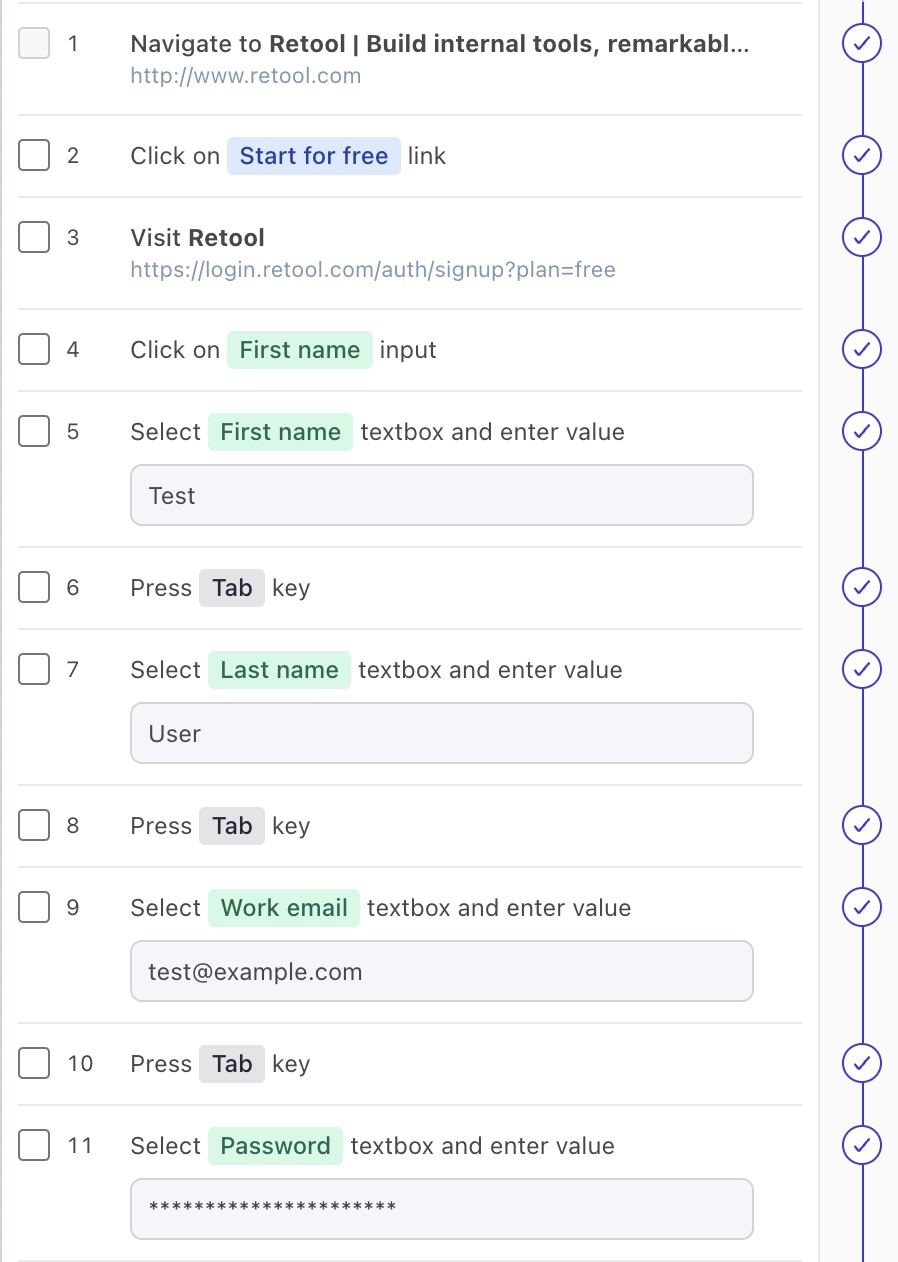

Form Entry

Reflect detects interactions with all form entry elements, including checkboxes, radio buttons, dropdowns, text-areas, input boxes, and buttons. The metadata associated with each form element depends on the type of form element under test.

On the left sidebar, form entry test steps are organized under their common form parent (or under the body tag if no <form> tag is present).

WYSIWYG Elements

Edits to WYSIWYG elements (enabled via the contenteditable attribute) are detected and replicated in the Reflect test runner. These elements are typically outside of a <form> element and so are recorded as standalone test steps.

The ‘value’ property

Most form elements contain a value property, whose meaning depends on the type of form element being interacted with. As such, Reflect will treat the value of a form element differently depending on what element is under test.

With form elements such as <input type="text"> and <textarea>, the value represents the text that’s visible within the element, and so Reflect will populate this value in the Expected Text section.

With form elements like <input type="checkbox">, the value represents an internal value that is not visible on the page, and so in this case Reflect will populate the value in the Attributes section.

Lastly, the <input type="file"> does not have a meaningful value and the <input type="image"> does not define a value property at all. We ignore the value property entirely for these form elements.
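The three cases above can be summarized as a small lookup. This is only a sketch of the rules as described in this section: the function name classifyValue and the returned labels are hypothetical, and element types that this section does not mention are reported as "unspecified" rather than guessed.

```javascript
// Hypothetical classification of how a form element's `value` is treated,
// covering only the element types named in this section.
function classifyValue(tagName, type) {
  const tag = tagName.toLowerCase();
  if (tag === "textarea") return "expected-text";
  if (tag !== "input") return "unspecified";

  // An <input> without a type attribute behaves as type="text".
  const inputType = (type || "text").toLowerCase();
  switch (inputType) {
    case "text":
      return "expected-text"; // value is the visible text
    case "checkbox":
      return "attributes"; // value is internal, not visible on the page
    case "file":
    case "image":
      return "ignored"; // no meaningful value
    default:
      return "unspecified"; // not covered by this section
  }
}

console.log(classifyValue("input", "checkbox")); // attributes
console.log(classifyValue("TEXTAREA")); // expected-text
```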

Scrolling

User-initiated scrolls are detected and will be exactly replicated in your tests. Scrolls that occur automatically (such as visiting a link with a # fragment in the URL) are not initiated by the user and thus will not be recorded. Similarly, scrolls that occur indirectly as a result of a user action (such as clicking on a ‘Back to Top’ button) are not recorded as they are initiated from a previously recorded action (in this case, clicking ‘Back to Top’).

In addition to scrolls that occur on the main document, Reflect detects and records scrolls inside scrollable child elements.

Finally, Reflect supports and enables scroll into view by default on all test steps. This feature detects when elements are on the page but out-of-view, and scrolls the element into view automatically before interacting with it.
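To make the idea of "on the page but out-of-view" concrete, the sketch below checks whether an element's bounding box falls entirely outside the viewport. It is an assumption about how such a check could be written, not Reflect's implementation; the helper name isOutOfView is hypothetical, and the browser calls in the comment (getBoundingClientRect, scrollIntoView) are standard DOM APIs.

```javascript
// Hypothetical helper: true when a bounding box lies entirely outside
// a viewport of the given width and height.
function isOutOfView(rect, viewport) {
  return (
    rect.bottom <= 0 ||
    rect.right <= 0 ||
    rect.top >= viewport.height ||
    rect.left >= viewport.width
  );
}

// In a browser, the check and the scroll could be combined like this:
//   const rect = element.getBoundingClientRect();
//   const viewport = { width: window.innerWidth, height: window.innerHeight };
//   if (isOutOfView(rect, viewport)) element.scrollIntoView({ block: "center" });

const viewport = { width: 1280, height: 720 };
const belowTheFold = { top: 1200, bottom: 1240, left: 0, right: 100 };
const visible = { top: 100, bottom: 140, left: 0, right: 100 };
console.log(isOutOfView(belowTheFold, viewport)); // true
console.log(isOutOfView(visible, viewport)); // false
```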

Keypresses

In addition to detection of keypresses within input text elements, text-areas, and contenteditable WYSIWYG elements, Reflect also detects other types of keypresses that change the state of the page. This includes Tab or Shift+Tab keystrokes to change the focused element on the page, as well as any keyboard shortcuts implemented by the page authors.

Note

Reflect currently restricts keypresses to either a single key, or a combination of Shift + an arbitrary key. Other keyboard combinations are currently blocked in the recorder and are not supported.
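One way to read this restriction is as a check on a key event's modifier flags. The sketch below is an interpretation of the rule stated above, not the recorder's actual code; the function name isSupportedKeypress is hypothetical, while ctrlKey, altKey, metaKey, and shiftKey are standard KeyboardEvent properties.

```javascript
// Hypothetical check: a keypress is treated as supported when it is a
// single key or Shift + a key, i.e. no Ctrl, Alt, or Meta modifier is held.
function isSupportedKeypress(event) {
  const { ctrlKey = false, altKey = false, metaKey = false } = event;
  return !ctrlKey && !altKey && !metaKey;
}

console.log(isSupportedKeypress({ key: "Tab" })); // true
console.log(isSupportedKeypress({ key: "Tab", shiftKey: true })); // true
console.log(isSupportedKeypress({ key: "c", ctrlKey: true })); // false
```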

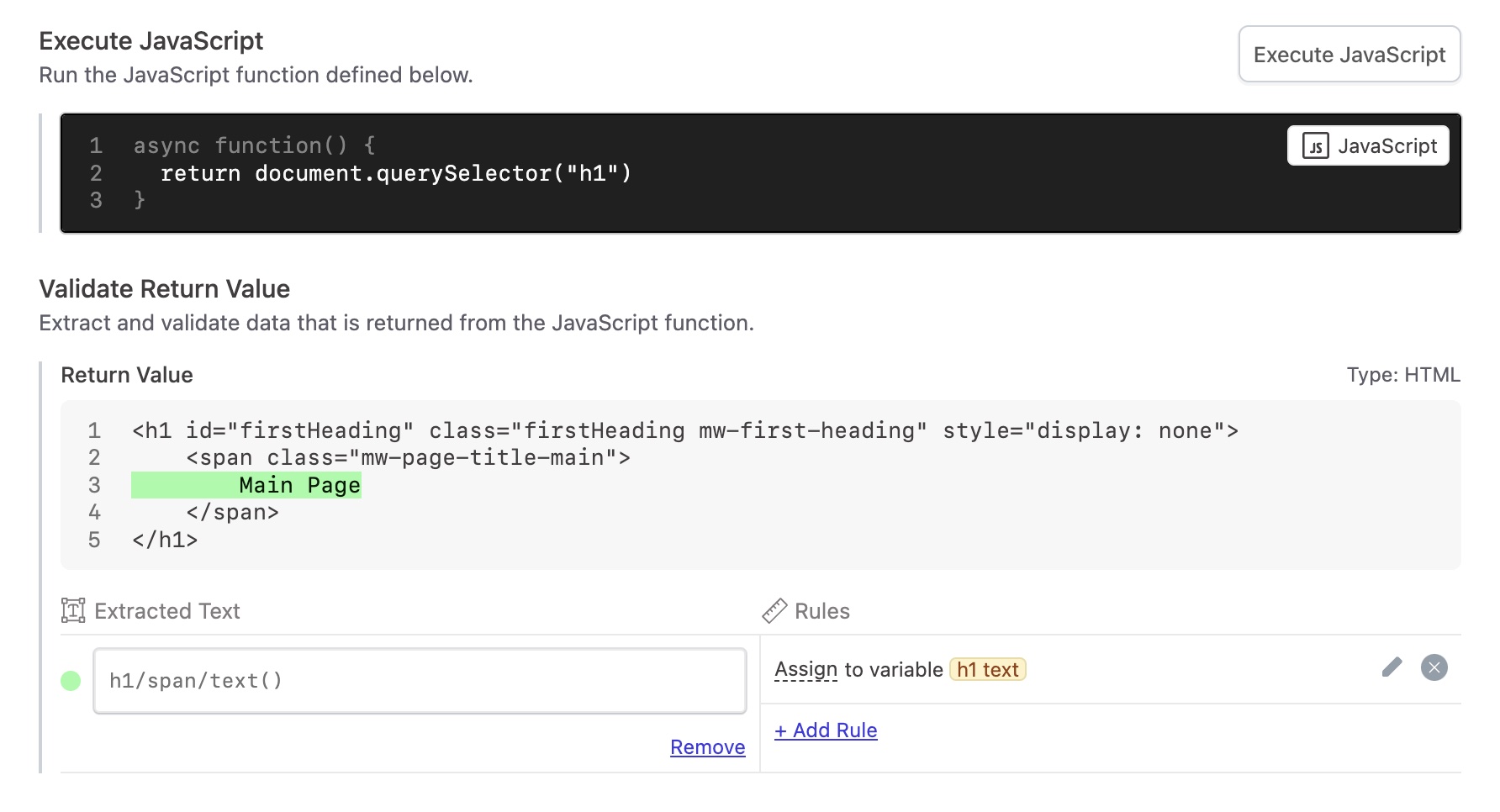

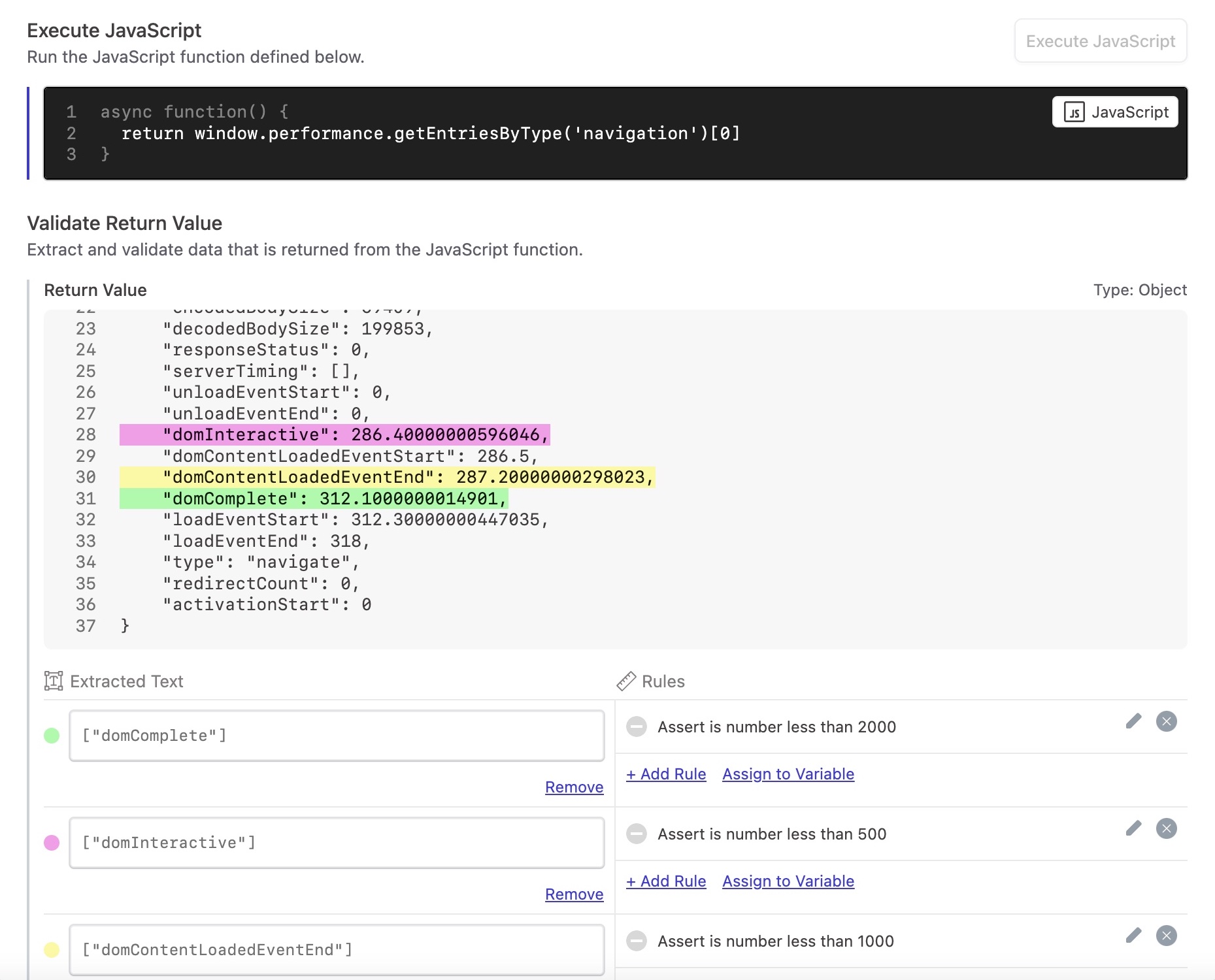

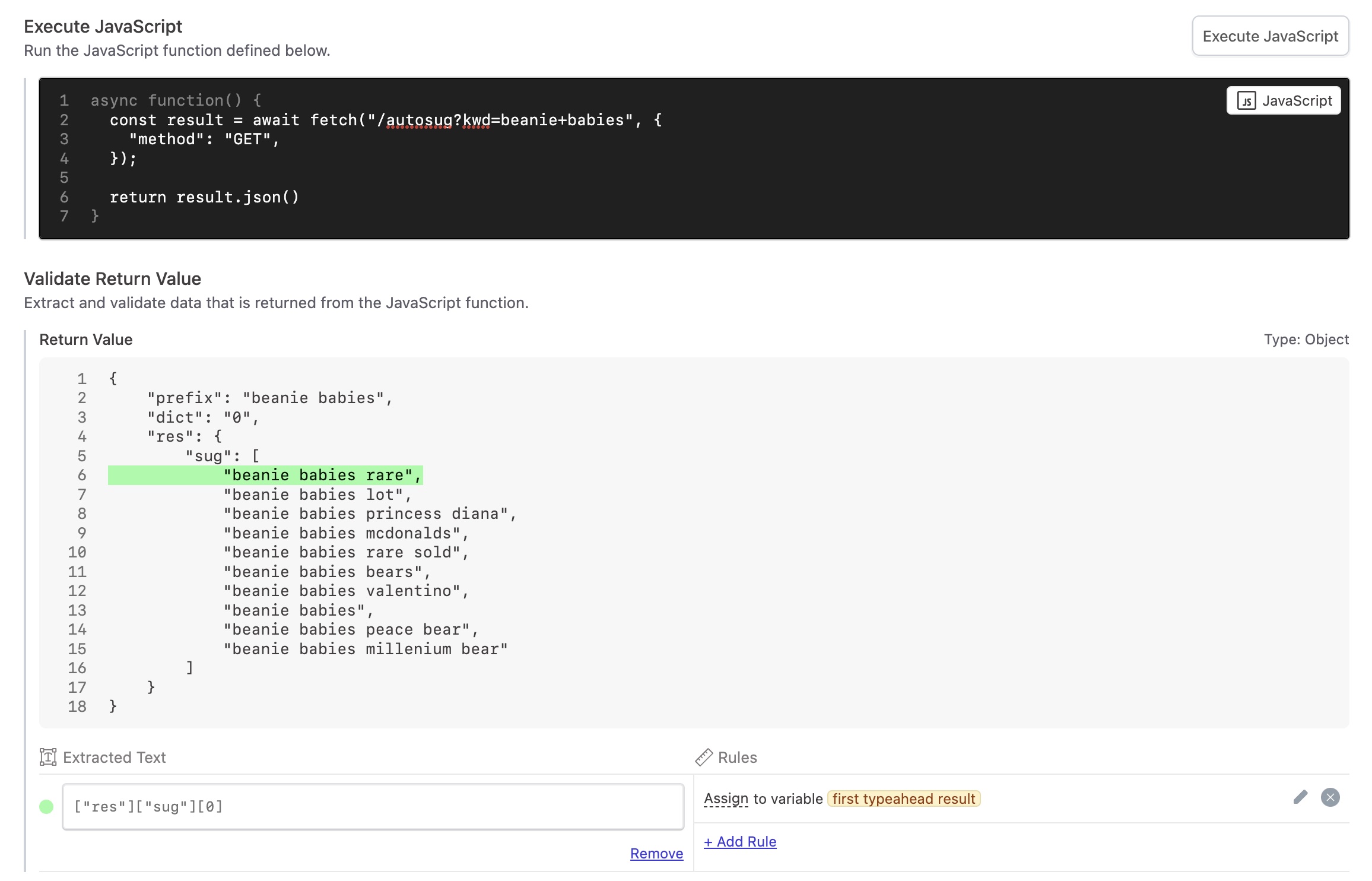

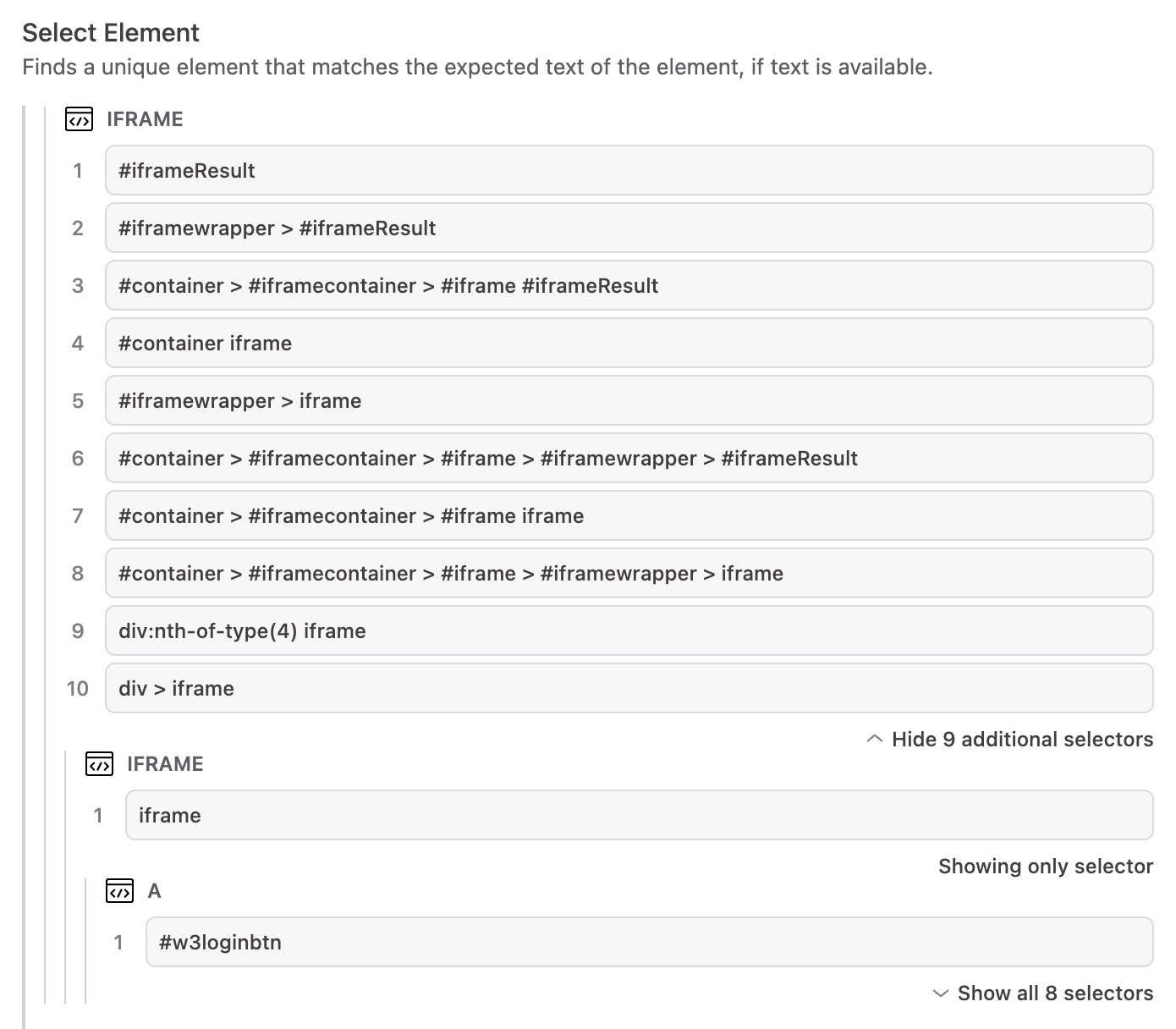

Execute JavaScript

For cases where you need to drop into code, Reflect supports executing arbitrary synchronous or asynchronous JavaScript code within the context of the browser session, and adding assertions on the resultant value. To add a code-based step, start by clicking the Execute JavaScript button in the recording view.

In most cases, code-based steps should return a single value so that the results can be asserted. The returned value is not asserted against by default. However, just like other test steps in Reflect, you can assert against the resultant value or assign the value to a variable via a point-and-click interface. If the resultant value is an HTML object or a JavaScript object, you can extract and assert on individual nodes / properties within that value.
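As an illustration of returning a JavaScript object whose properties can be asserted individually, the sketch below reduces a navigation timing entry to three numbers. The helper name summarizeNavigation and the property names of the returned object are hypothetical; the input fields (startTime, responseStart, domContentLoadedEventEnd, loadEventEnd) are standard PerformanceNavigationTiming properties.

```javascript
// Hypothetical helper: reduce a PerformanceNavigationTiming-like entry
// to a small object whose properties can be asserted one by one.
function summarizeNavigation(entry) {
  return {
    timeToFirstByteMs: Math.round(entry.responseStart - entry.startTime),
    domContentLoadedMs: Math.round(entry.domContentLoadedEventEnd - entry.startTime),
    loadMs: Math.round(entry.loadEventEnd - entry.startTime),
  };
}

// Inside an Execute JavaScript step, the body might be:
//   const [entry] = window.performance.getEntriesByType("navigation");
//   return summarizeNavigation(entry);

// Plain-object example with made-up timings:
const summary = summarizeNavigation({
  startTime: 0,
  responseStart: 120.4,
  domContentLoadedEventEnd: 810.2,
  loadEventEnd: 1499.6,
});
console.log(summary);
// { timeToFirstByteMs: 120, domContentLoadedMs: 810, loadMs: 1500 }
```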

Use-cases for a code-based step include:

Extracting an authentication token out of cookies / LocalStorage / SessionStorage for use in subsequent API steps.

Verifying that an element is NOT present on the page (e.g. return document.querySelector(".error-message") and asserting that the result is empty).

Retrieving front-end performance metrics (e.g. return window.performance.getEntriesByType('navigation') and asserting on the desired results).

While explicit waits are not recommended, for edge cases you can add one to a test with a Promise:

    // Wait for 2 seconds
    return new Promise((resolve) => {
      setTimeout(resolve, 2_000)
    })

Example: Extracting the H1 tag from the current page and assigning its text value to a Variable

Example: Extracting front-end performance metrics and asserting that page load times are within acceptable bounds

Example: Executing async API calls within the context of the browser session
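For the authentication-token use-case mentioned above, a code-based step could parse the cookie string directly. This is a minimal sketch under assumptions: the helper name readCookie and the cookie name auth_token are hypothetical, and cookies flagged HttpOnly are not exposed through document.cookie, so this only works for cookies that page scripts can read.

```javascript
// Hypothetical helper: read one cookie value out of a
// `document.cookie`-style string ("a=1; b=2").
function readCookie(cookieString, name) {
  for (const pair of cookieString.split(";")) {
    const trimmed = pair.trim();
    const separator = trimmed.indexOf("=");
    if (separator === -1) continue; // skip malformed entries
    if (trimmed.slice(0, separator) === name) {
      return decodeURIComponent(trimmed.slice(separator + 1));
    }
  }
  return null; // cookie not present
}

// Inside an Execute JavaScript step, the body might be:
//   return readCookie(document.cookie, "auth_token");

const token = readCookie("theme=dark; auth_token=abc%3D123; lang=en", "auth_token");
console.log(token); // abc=123
```

The returned string could then be assigned to a variable and used in a later API step.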

Drag and Drop

Drag-and-drop (DnD) actions recorded in Reflect will play back in a test using the same mouse gestures used in the original recording. This allows for accurate recording of complex DnD actions of arbitrary length and duration. In the example below, Reflect is able to create a pixel-perfect replica of the following image created in an online MSPaint-like app:

Reflect’s drag and drop detection works for both native HTML5 DnD implementations and JavaScript-based DnD libraries, including popular libraries such as:

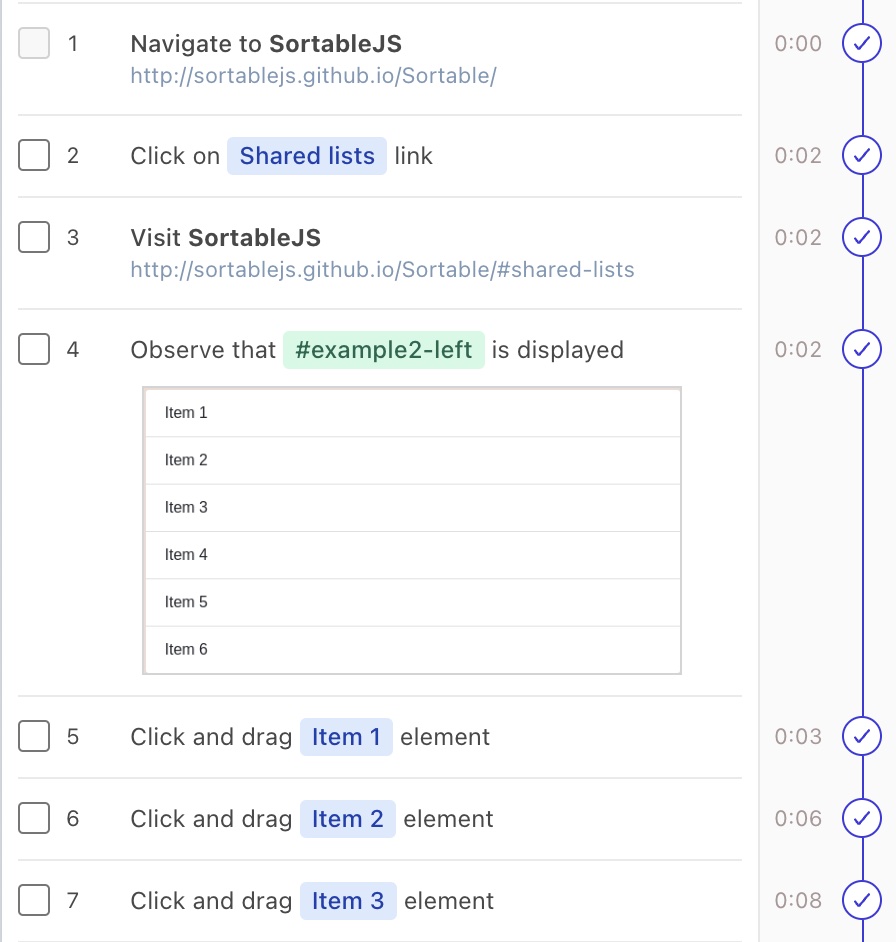

Click and Drag

The same logic that powers drag-and-drop support also enables you to test click-and-drag behavior, which is a different but related action. Supported click-and-drag examples include:

Resizing a textarea

Moving a slider to a different position

Note

To preserve test fidelity, the ability to click-and-drag to select text is disabled within Reflect recording sessions.

Swiping

For mobile tests, Reflect will detect and replicate swipes using the same detection logic underpinning drag-and-drops and click-and-drags. Examples of swipe-enabled elements include:

Mobile carousels

Slide shows

“Cover Flow” effects

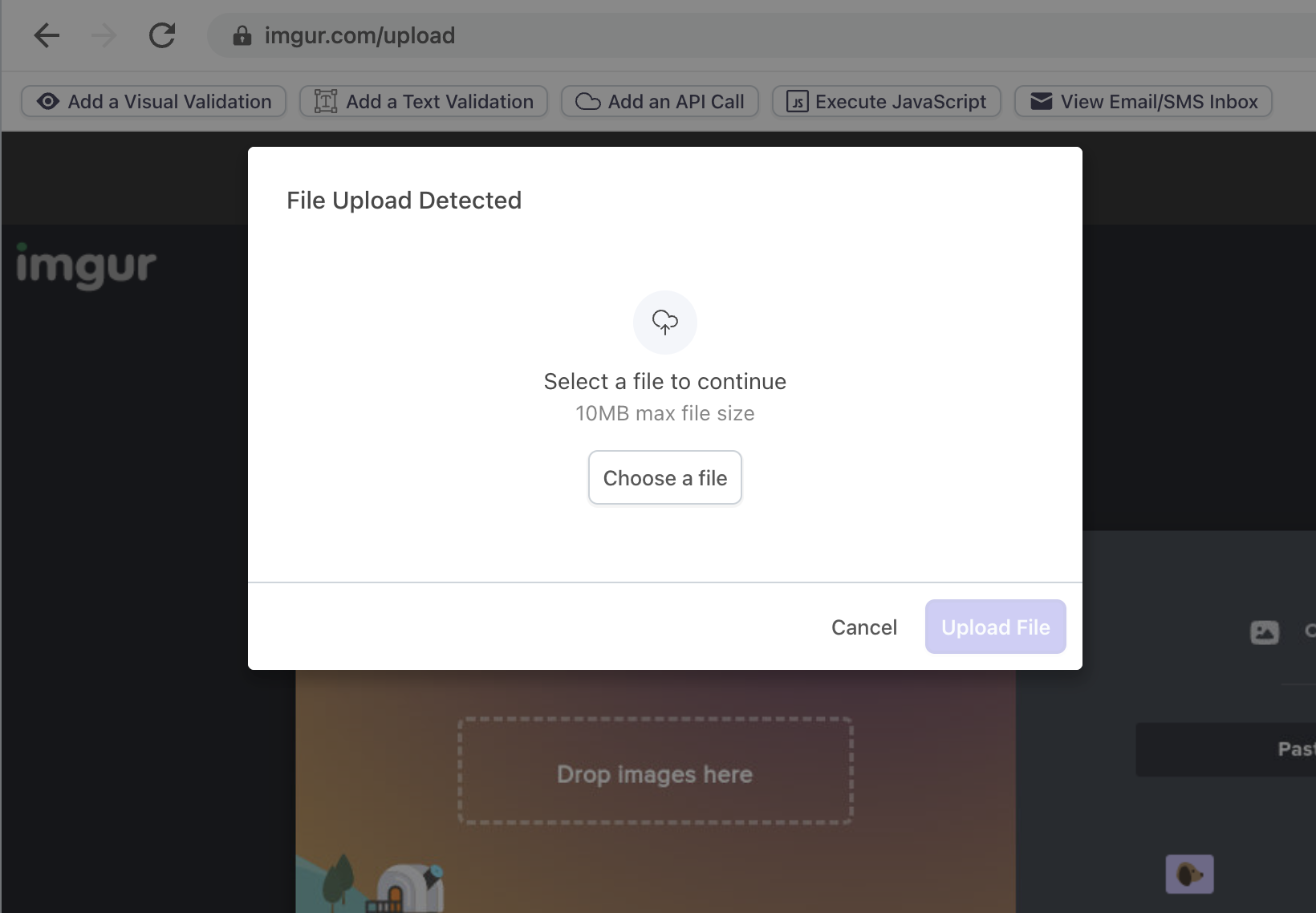

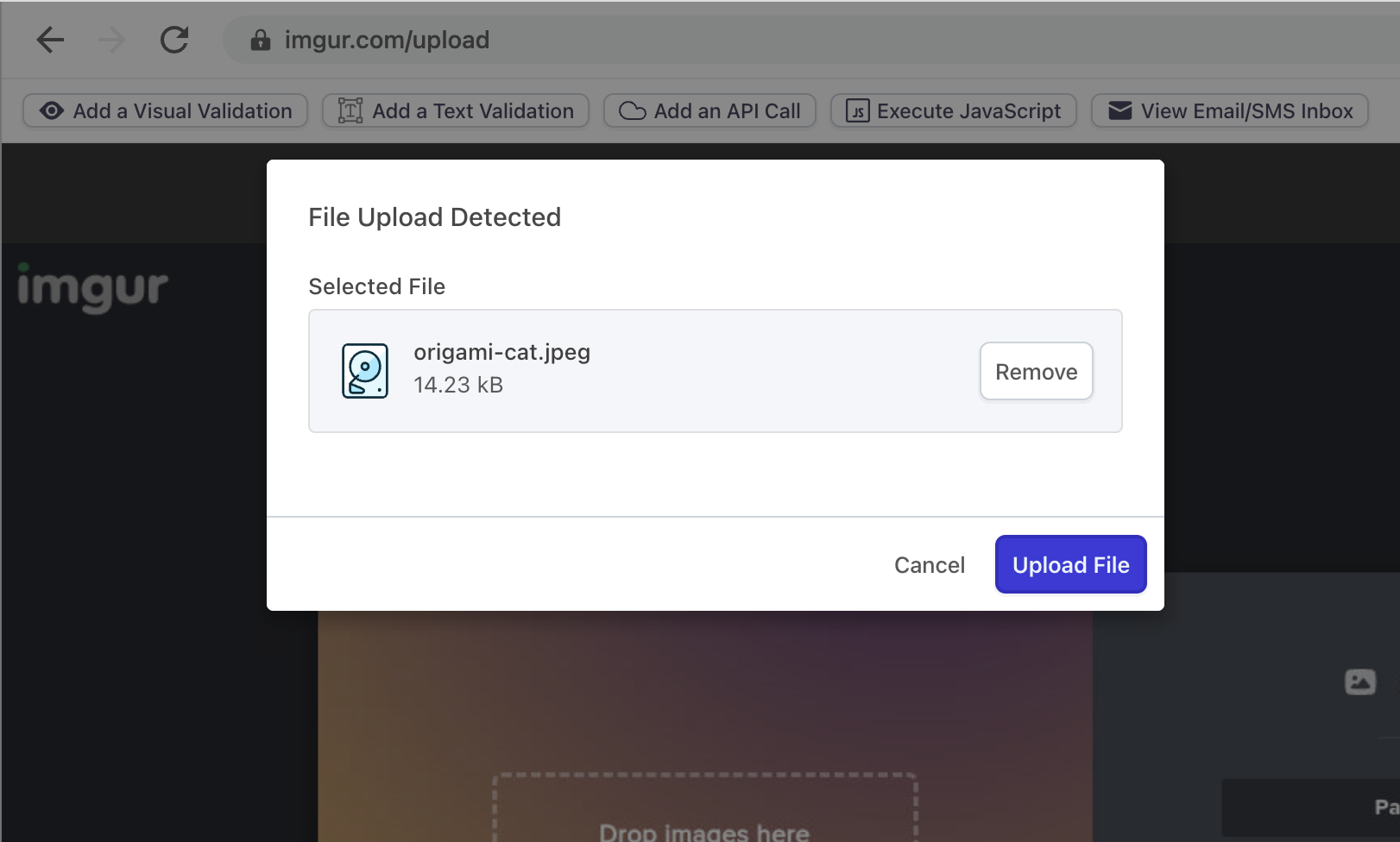

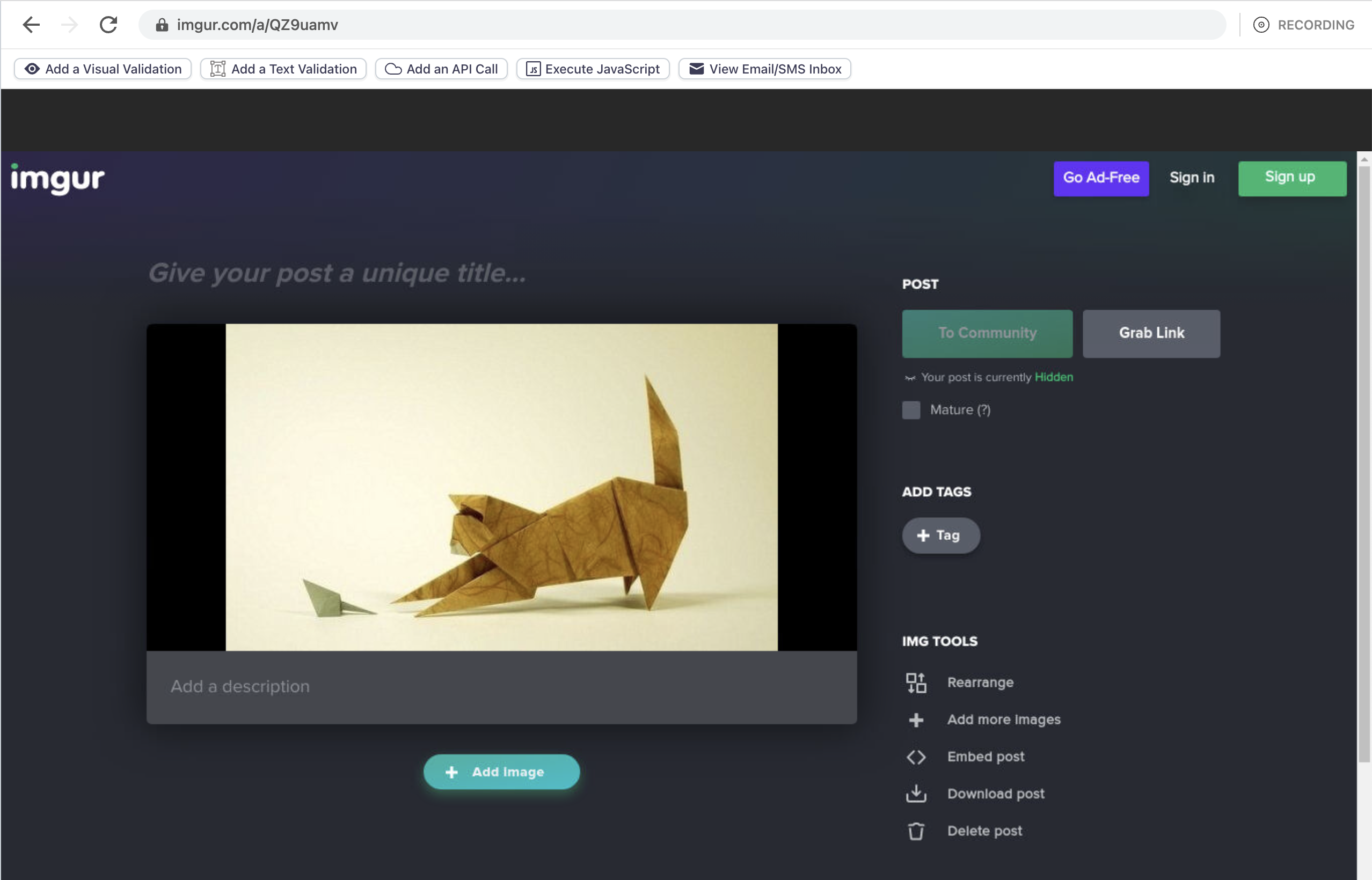

File Upload

When you click on an <input type="file"> element or interact with any element that would normally trigger a native filepicker modal, Reflect will intercept the action and prompt you to select a file from your local filesystem.

After selecting a file and clicking Upload, we’ll inject your file into the recording session and save a copy of the file for use in subsequent test runs.

You can access uploaded files at any time by selecting a test run, choosing the test step containing the file upload, and clicking “Download File” from the Test Step Detail view.

Note

File uploads are capped at 10MB. If you require a higher limit, please email [email protected].

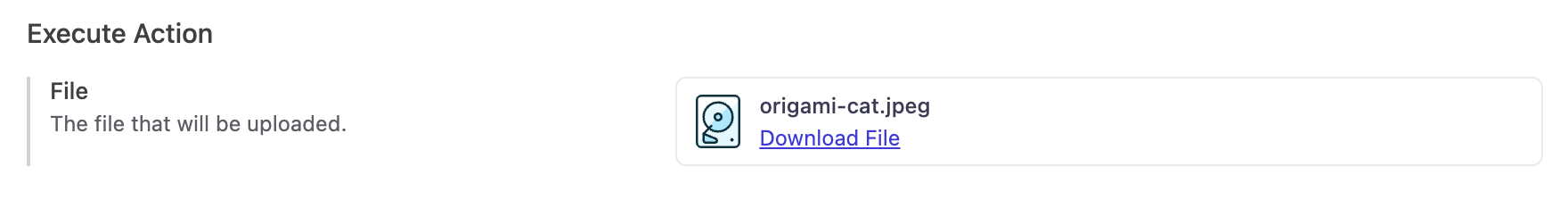

File Download

Reflect will automatically detect and validate files that are downloaded within a web session. When recording a test, a modal will be displayed whenever a file download is detected. Once the file has been successfully downloaded, you will be given the option to add the File Download step to the test.

During a test run, a File Download step will assert that a file is downloaded at that point in the run. By default, Reflect will assert that the downloaded file matches the URL, filename, and file size of the file that was originally downloaded at recording time. Each of these matching criteria can be customized or disabled entirely.
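The matching behavior described above can be pictured as three independent comparisons, each of which can be switched off. The sketch below is an assumption about the shape of such a check, not Reflect's implementation: the function name checkDownload, the record fields (url, filename, size), and the criteria flags are all hypothetical, and it only models enabling or disabling a criterion, not customizing it.

```javascript
// Hypothetical check: compare a downloaded file against the one captured
// at recording time. Every criterion is enabled unless disabled explicitly.
// Returns the list of criteria that failed (empty means the step passes).
function checkDownload(expected, actual, criteria = {}) {
  const { url = true, filename = true, size = true } = criteria;
  const failures = [];
  if (url && expected.url !== actual.url) failures.push("url");
  if (filename && expected.filename !== actual.filename) failures.push("filename");
  if (size && expected.size !== actual.size) failures.push("size");
  return failures;
}

// Made-up example: the report is regenerated on each run, so its size differs.
const recorded = { url: "https://example.com/report.csv", filename: "report.csv", size: 2048 };
const downloaded = { url: "https://example.com/report.csv", filename: "report.csv", size: 2310 };

console.log(checkDownload(recorded, downloaded)); // [ 'size' ]
console.log(checkDownload(recorded, downloaded, { size: false })); // []
```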

Native Alerts

Native alerts are modal dialogs that use the native OS chrome rather than custom-styled DOM elements. The following native alerts are detected and recorded by Reflect:

alert(): Displays a message and a single ‘OK’ button.

confirm(): Displays a message and both an ‘OK’ and ‘Cancel’ button.

prompt(): Displays a message, an input box, and both ‘OK’ and ‘Cancel’ buttons.

Note

Dialogs defined within a beforeunload event callback, as described in this MDN article, are not currently supported.

Reflect validates the presence of the modal dialog as well as its text content and user selection. Because these native alerts are validated automatically, you do not need to use a Visual Validation on these dialog boxes, which could otherwise lead to false-positive failures when executing tests across different browsers.

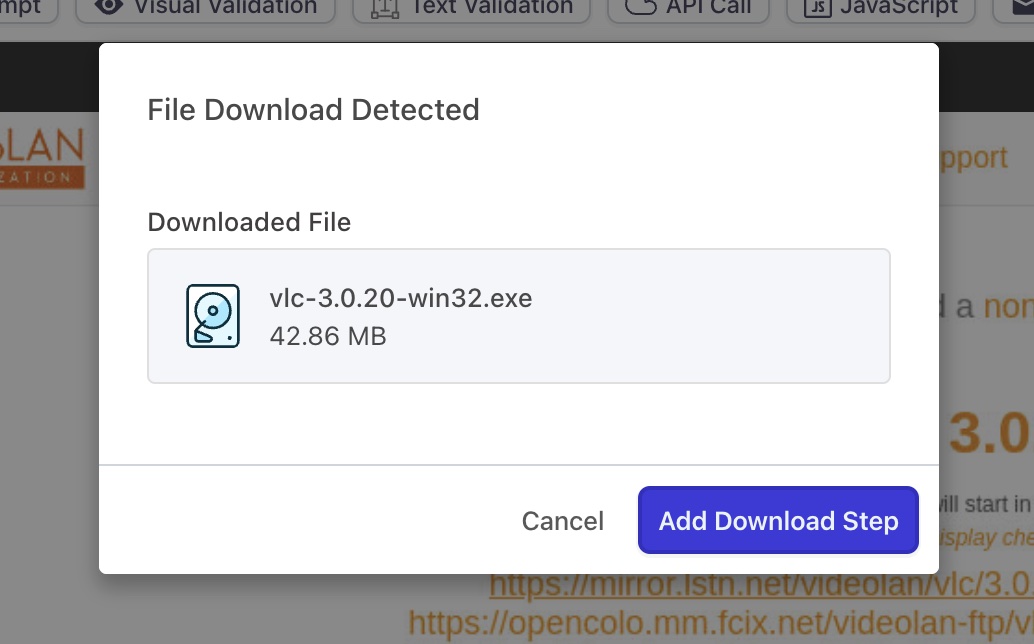

IFrames

Reflect handles iframes automatically. When recording a test, Reflect detects if you are interacting with an element inside of an iframe. When it retrieves the selectors for elements within an iframe, it also retrieves the selectors that target the containing iframe.

When the test runs, Reflect first attempts to locate the containing iframe, and, once found, attempts to locate the selector of the interacted element that is inside of the iframe.

As iframes can be nested within other iframes, Reflect supports retrieving elements within nested iframes at an arbitrary level of depth.
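The lookup order described above (containing iframe first, then the element inside it) can be sketched as a walk over a chain of selectors. This is an illustration under assumptions, not Reflect's implementation: the function name findInFrames and the selectors in the comment are hypothetical. It relies on the standard contentDocument property, which is null for cross-origin frames, so this page-level approach only reaches same-origin iframes.

```javascript
// Hypothetical helper: resolve an element nested inside one or more
// iframes. `frameSelectors` lists the iframes from outermost to innermost.
function findInFrames(rootDocument, frameSelectors, elementSelector) {
  let doc = rootDocument;
  for (const selector of frameSelectors) {
    const frame = doc.querySelector(selector);
    // contentDocument is null when the frame is cross-origin or not loaded.
    if (!frame || !frame.contentDocument) return null;
    doc = frame.contentDocument;
  }
  return doc.querySelector(elementSelector);
}

// In a browser (selectors are hypothetical):
//   findInFrames(document, ["iframe#checkout", "iframe.card-form"], "input[name=card]");
```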

Shadow DOM

If your application uses native Web Components, then you are likely using Shadow DOM. Shadow DOM is similar to iframes in that it prevents styling or JavaScript outside of the Web Component from interacting with elements inside the Web Component. Sites utilizing Web Components are typically hard to test because targeting Shadow DOM elements is not straightforward. (Selenium, for example, does not have native support for Shadow DOM.) Interactions that occur inside Shadow DOM elements are captured by Reflect just like any normal element. Our approach works by first accessing the parent element of the Shadow DOM, then accessing the element within the Shadow DOM. Separate selectors are generated for the parent and for the element inside the Shadow DOM, and we also support nested Shadow DOM elements.
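The two-stage lookup (host element first, then the element inside its Shadow DOM) can be sketched with the standard shadowRoot property. This is an illustration under assumptions rather than Reflect's implementation: the function name findInShadow and the selectors in the comment are hypothetical, and because shadowRoot is null for shadow roots created in closed mode, this sketch only reaches open shadow roots.

```javascript
// Hypothetical helper: resolve an element nested inside one or more
// shadow roots. `hostSelectors` lists the shadow hosts from outermost
// to innermost.
function findInShadow(root, hostSelectors, elementSelector) {
  let scope = root;
  for (const selector of hostSelectors) {
    const host = scope.querySelector(selector);
    // shadowRoot is null for closed shadow roots and for plain elements.
    if (!host || !host.shadowRoot) return null;
    scope = host.shadowRoot;
  }
  return scope.querySelector(elementSelector);
}

// In a browser (selectors are hypothetical):
//   findInShadow(document, ["app-shell", "login-form"], "button[type=submit]");
```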

Text Highlighting

Reflect will detect when you highlight text on the page and will automatically create a test step for that action. So, for example, if you were testing an online document editor, you could use Reflect to test behavior that relies on text highlighting, such as adding comments or annotations to a document after highlighting a subsection of text.

Video Camera

All test recordings and test runs that execute with the Chrome browser are configured to have a default virtual video device. Interactive applications such as video chat can read from this virtual device and use the looped video stream as if it were from a real user.