In TestComplete, you can use optical character recognition to find a screen area by the text it shows and simulate user actions on that area (for example, click it).

In keyword tests

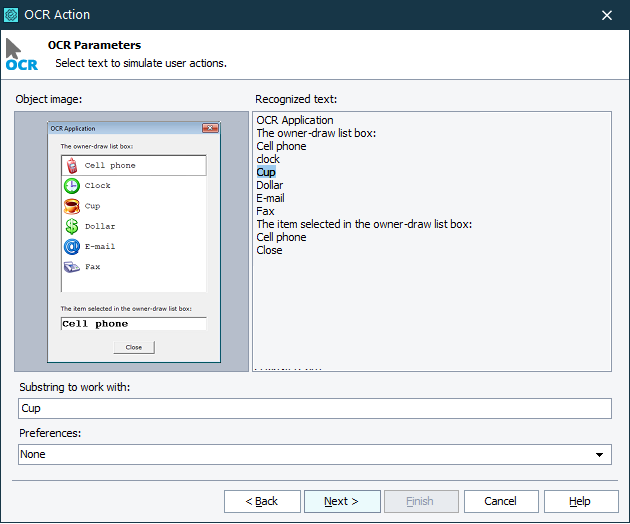

Use the OCR Action operation to locate the screen area that contains specific text and then simulate user actions over that area or over nearby areas:

-

Add the operation to your test.

-

Select the onscreen object on which you want to simulate user actions. TestComplete will recognize all the text in that object.

The selected object must exist in your system and must be visible on screen.

The selected object must exist in your system and must be visible on screen.Note: To specify an object in a mobile application, you can select it in the Mobile Screen window. Both the application under test, and the mobile device where the application is running must be prepared for testing in order for TestComplete to be able to access them.

To get the entire screen of your mobile device, you can use theMobile.Device.Desktopproperty. -

Select the needed text fragment. If there are several fragments that contain text, specify the one you need:

-

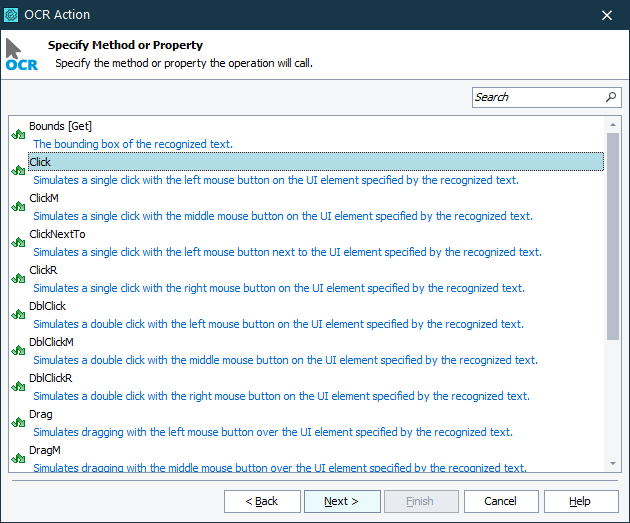

To simulate a user action over the screen area that contains the fragment, select the appropriate method:

-

Specify method parameters, if needed.

-

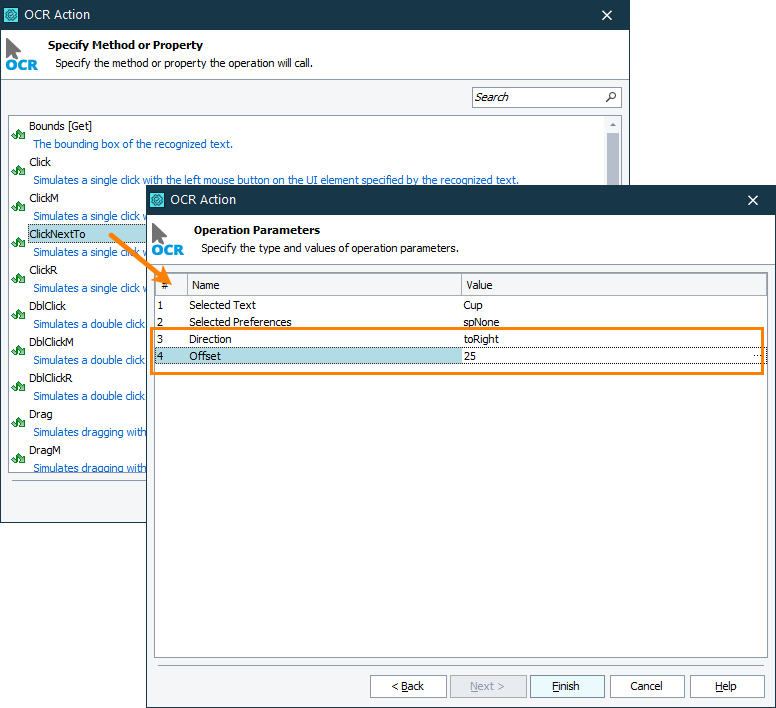

To simulate a mouse click (or a touch) over the area next to the text fragment, select the

ClickNextTo(orTouchNextTo) method. To simulate keyboard input in the area next to the text fragment, select theSendKeysmethod. Specify the target area location:

In scripts

- Use the OCR.Recognize method to recognize the text that the object or screen area contains.

- Use the Block or BlockByText property to get the area that contains a specific text fragment.

- To simulate user actions over the screen area that contains the text fragment, call the appropriate method. For example:

  JavaScript, JScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).Click();

  Python

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).Click()

  VBScript

      Call OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).Click

  DelphiScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText('*clock*', spLargest).Click;

  C#Script

      OCR.Recognize(Aliases["myApp"]["wndForm"])["BlockByText"]("*clock*", spLargest)["Click"]();

- To simulate a click (or a touch) over the screen area next to the area that contains the text fragment, call the ClickNextTo (or TouchNextTo) method and specify the target area location. For example:

  JavaScript, JScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).ClickNextTo(toRight, 15);

  Python

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).ClickNextTo(toRight, 15)

  VBScript

      Call OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*clock*", spLargest).ClickNextTo(toRight, 15)

  DelphiScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText('*clock*', spLargest).ClickNextTo(toRight, 15);

  C#Script

      OCR.Recognize(Aliases["myApp"]["wndForm"])["BlockByText"]("*clock*", spLargest)["ClickNextTo"](toRight, 15);

  To simulate keyboard input in the screen area next to the text fragment, call the SendKeys method and specify the keys to be pressed and the target area location. For example:

  JavaScript, JScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*item*", spLargest).SendKeys("test", toRight, 15);

  Python

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*item*", spLargest).SendKeys("test", toRight, 15)

  VBScript

      Call OCR.Recognize(Aliases.myApp.wndForm).BlockByText("*item*", spLargest).SendKeys("test", toRight, 15)

  DelphiScript

      OCR.Recognize(Aliases.myApp.wndForm).BlockByText('*item*', spLargest).SendKeys('test', toRight, 15);

  C#Script

      OCR.Recognize(Aliases["myApp"]["wndForm"])["BlockByText"]("*item*", spLargest)["SendKeys"]("test", toRight, 15);
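The script examples in this section pass a wildcard pattern such as "*clock*" to BlockByText. As a rough illustration of which recognized strings such a pattern could select, the sketch below uses Python's standard fnmatch module. It is only an approximation under stated assumptions: the matches helper and the sample strings are hypothetical, the case-insensitive comparison is an assumption, and TestComplete's own matching rules may differ.

```python
from fnmatch import fnmatchcase


def matches(pattern, text):
    """Case-insensitive wildcard match (an assumption, not TestComplete's matcher).

    '*' stands for any run of characters, including an empty one.
    """
    return fnmatchcase(text.lower(), pattern.lower())


# Hypothetical strings that OCR might return for a window.
candidates = ["Clock", "Alarm clock settings", "Calendar"]

selected = [text for text in candidates if matches("*clock*", text)]
print(selected)  # ['Clock', 'Alarm clock settings']
```

When more than one fragment matches, as here, a selection preference such as spLargest in the examples above decides which block is used.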

Supported user actions

You can simulate the following user actions on screen areas that TestComplete recognizes by their text content:

In Desktop and Web Applications

- Clicks and double-clicks

  By default, these methods simulate clicks at the center of the specified screen area. To simulate clicks at a specific point of the area, call the methods with the appropriate parameters.

  The ClickNextTo method simulates a click at the point located at the specified distance to the left, to the right, at the top or at the bottom of the recognized text block.

- Hover mouse

- Drag

In Mobile Applications

- Touches and long touches

  LongTouch

  Note: On Android devices, the HoldDuration parameter of the LongTouch method is not supported and will be ignored.

  By default, these methods simulate touches at the center of the specified area. To simulate touches at a specific point of the area, call the methods with the appropriate parameters.

  The TouchNextTo method simulates a touch at the point located at the specified distance to the left, to the right, at the top or at the bottom of the recognized text block.

- Drag

In All Applications

- Keyboard input

  The SendKeys method simulates keyboard input in the screen area located at the specified distance to the left, to the right, at the top or at the bottom of the recognized text block.
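The "next to" actions described in this section (ClickNextTo, TouchNextTo and SendKeys) target a point at a given distance from the recognized text block. The Python sketch below illustrates that geometry only. It assumes the distance is measured from the block's nearest edge and that the point is centered along that edge; the reference point TestComplete actually uses is not stated here. The Block type, the direction names, and point_next_to are hypothetical and are not part of the TestComplete API.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """Bounding box of a recognized text block, in pixels (hypothetical type)."""
    left: int
    top: int
    width: int
    height: int


def point_next_to(block, direction, distance):
    """Return the (x, y) point `distance` pixels away from one edge of `block`.

    Assumption: the point is centered along the chosen edge.
    """
    center_x = block.left + block.width // 2
    center_y = block.top + block.height // 2
    if direction == "left":
        return (block.left - distance, center_y)
    if direction == "right":
        return (block.left + block.width + distance, center_y)
    if direction == "top":
        return (center_x, block.top - distance)
    if direction == "bottom":
        return (center_x, block.top + block.height + distance)
    raise ValueError(f"unknown direction: {direction!r}")


# A label 60 px wide and 20 px high whose top-left corner is at (100, 40).
label = Block(left=100, top=40, width=60, height=20)
print(point_next_to(label, "right", 15))  # (175, 50)
```

This mirrors a call such as ClickNextTo(toRight, 15) from the script examples: the target lies 15 pixels beyond the right edge of the block, which is where an edit box next to a label typically sits.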

Connecting to Mobile Device Clouds and Opening Testing Sessions

Preparing for Android Testing (Legacy Mobile Support)


See Also

Optical Character Recognition


Mobile Screen Window

Selecting an Object on the Mobile Screen (Android Testing)

Select an Object on the Mobile Screen (iOS Testing)